TensorFlow provides predefined models that can be retrained to recognize certain objects in images. OpenWhisk is a serverless platform that executes functions in the cloud. With Kubernetes you can run heavy workloads in the cloud, for example the training of new TensorFlow models. Check out our open source sample that shows how to use these technologies together to recognize certain types of flowers.

Get the code from GitHub or try out the live demo.

Let me summarize how Ansgar Schmidt and I built the sample.

TensorFlow

TensorFlow provides various predefined visual recognition models. These models can be used as a starting point to build your own models that recognize your own objects in images. This technique is called transfer learning. We chose the MobileNet model, which can run in OpenWhisk because it needs less than 512 MB of RAM. The codelab TensorFlow for Poets describes this functionality in more detail, and we’ve used some of its code in our sample.

Object Storage

IBM provides Object Storage to store files in the cloud, which can be accessed via the S3 or Swift protocols. There is a lite plan that lets you run this sample without paying anything and without providing payment information. We use Object Storage to store the training set of flowers and to store and read the trained models.

Training with Kubernetes

In our sample we leverage flower images provided by Google. In order to train the model we’ve created a Docker image that extends the TensorFlow Docker image and contains code from TensorFlow for Poets. A script reads the flower images from Object Storage, triggers the training and stores the models back in Object Storage. The Docker container can be run locally or on Kubernetes. We’ve used the free lite plan available in the IBM Cloud.
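The three steps of that script can be sketched roughly as follows. This is a minimal sketch, not the sample's actual code: the retrain flags follow the TensorFlow for Poets codelab, while `build_retrain_command`, `run_training`, the directory names and the `download`/`upload` callables (stand-ins for the Object Storage access) are hypothetical.

```python
import subprocess
from typing import Callable, List


def build_retrain_command(image_dir: str, output_dir: str,
                          architecture: str = "mobilenet_0.50_224",
                          steps: int = 500) -> List[str]:
    """Build the argv for the TensorFlow for Poets retrain script.

    Assumes the codelab's scripts/retrain.py is importable as scripts.retrain.
    """
    return [
        "python", "-m", "scripts.retrain",
        f"--image_dir={image_dir}",
        f"--architecture={architecture}",
        f"--how_many_training_steps={steps}",
        f"--output_graph={output_dir}/retrained_graph.pb",
        f"--output_labels={output_dir}/retrained_labels.txt",
    ]


def run_training(download: Callable[[str], None],
                 upload: Callable[[str], None],
                 run: Callable[[List[str]], object] = subprocess.check_call,
                 image_dir: str = "tf_files/flower_photos",
                 output_dir: str = "tf_files") -> None:
    """Read images, train, and store the model, in that order."""
    download(image_dir)   # hypothetical: fetch flower images from Object Storage
    run(build_retrain_command(image_dir, output_dir))
    upload(output_dir)    # hypothetical: store graph and labels in Object Storage
```

Passing the storage steps in as callables keeps the sketch independent of whether the S3 or the Swift protocol is used.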

Classification with OpenWhisk

To classify images, Ansgar built a second Docker image that extends the TensorFlow image and runs Python code to classify images against the trained model, which is read from Object Storage. The Docker image can be deployed to the cloud as an OpenWhisk function. The classification of an image takes less than 160 ms. Since loading the model takes some time, Ansgar runs this code in the ‘/init’ endpoint to leverage OpenWhisk’s caching capabilities: the endpoint is called once per container, so later invocations in the same container reuse the loaded model.
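To illustrate the idea, here is a minimal sketch of a Docker action that loads its model in ‘/init’ and classifies in ‘/run’. It assumes the usual OpenWhisk Docker action contract (an HTTP server on port 8080 that receives JSON with a `value` object on ‘/run’). The `load_model` and `classify` functions are placeholders that return dummy data, and the `image` parameter name is an assumption, not necessarily what the sample uses.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

MODEL = None  # loaded once per container by /init, reused by every /run


def load_model():
    """Placeholder: the real action would read the trained model from Object Storage."""
    return {"labels": ["daisy", "roses", "tulips"]}


def classify(model, image_b64):
    """Placeholder classifier that returns a uniform dummy score per label."""
    labels = model["labels"]
    return {"classes": [{"label": l, "score": 1.0 / len(labels)} for l in labels]}


def handle(path, body):
    """Dispatch one request and return (HTTP status, JSON-serializable result)."""
    global MODEL
    if path == "/init":
        MODEL = load_model()          # the slow step happens only here
        return 200, {"ok": True}
    if path == "/run":
        params = body.get("value", {})
        return 200, classify(MODEL, params.get("image", ""))
    return 404, {"error": "unknown path"}


class ActionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length) or b"{}")
        status, result = handle(self.path, body)
        payload = json.dumps(result).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


if __name__ == "__main__":
    HTTPServer(("0.0.0.0", 8080), ActionHandler).serve_forever()
```

Keeping the model in a module-level variable is what lets a warm container skip the loading step on subsequent invocations.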

Web Application and API

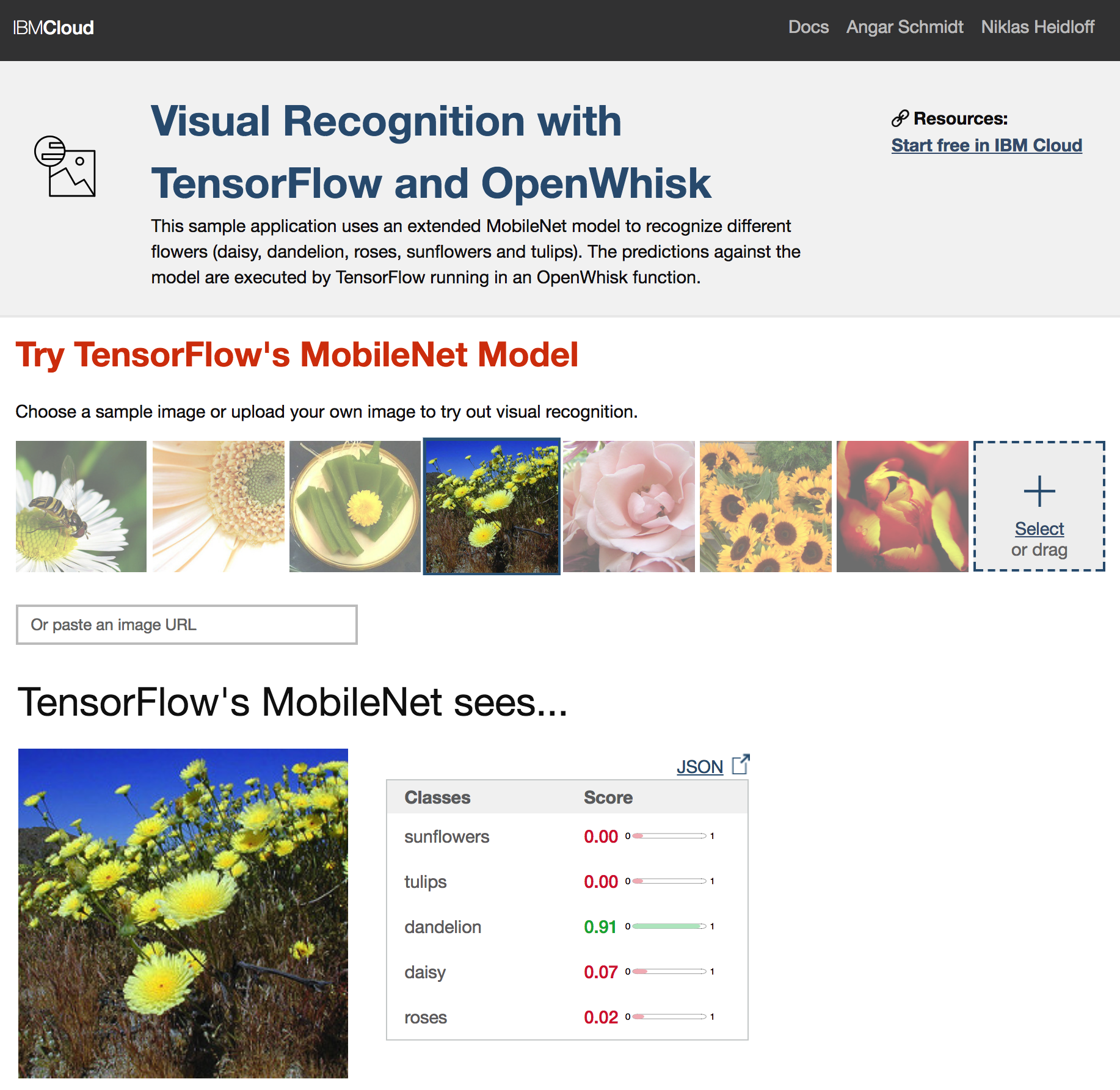

For demonstration and testing purposes we’ve built a simple API and a web application. A second OpenWhisk action receives an image via an HTTP POST request and converts it to base64, which is the format the classifier OpenWhisk action expects as input. The API action and the classifier action are chained in an OpenWhisk sequence, so the output of the first becomes the input of the second. The result is JSON containing the recognized classes and their probabilities. Via the web application you can upload files and see the predictions in the UI.
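The data flow between the two actions might look roughly like this. It is only a sketch under assumptions: the function names, the `image` key and the `class`/`probability` field names are hypothetical, and the scores in the usage example are made up for illustration.

```python
import base64
import json


def to_classifier_params(image_bytes: bytes) -> dict:
    """Wrap raw image bytes as base64 text, the input the classifier expects."""
    return {"image": base64.b64encode(image_bytes).decode("ascii")}


def top_predictions(scores: dict, k: int = 3) -> list:
    """Return the k highest-scoring classes, best first."""
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return [{"class": name, "probability": p} for name, p in ranked[:k]]


if __name__ == "__main__":
    params = to_classifier_params(b"\xff\xd8\xff")  # first bytes of a JPEG file
    print(json.dumps(params))
    # Made-up scores, only to show the shape of the JSON result.
    print(json.dumps(top_predictions({"daisy": 0.1, "roses": 0.7, "tulips": 0.2})))
```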

The screenshot at the top of this section shows the sample application.

Ansgar Schmidt and I have blogged about this project in more detail.

- Visual Recognition with TensorFlow and OpenWhisk

- Accessing IBM Object Storage from Python

- Image Recognition with Tensorflow training on Kubernetes

- Image Recognition with Tensorflow classification on OpenWhisk

- Sample Application to classify Images with TensorFlow and OpenWhisk

Enjoy!