Here is another example of how to steer Anki Overdrive cars via IBM Bluemix. In addition to a local controller, Watson speech recognition, and a Kinect, I’ve added gesture recognition via Leap Motion.

The sample is available as open source on GitHub. The project contains sample code that shows how to send MQTT commands to IBM Bluemix when gestures are recognized via Leap Motion.

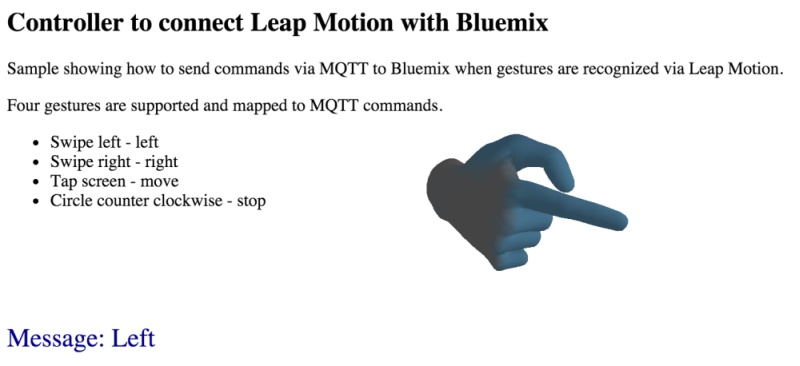

Right now four gestures can be recognized: swipe left, swipe right, screen tap and circle. The best way to explain the functionality is with pictures, so check out the ones in the screenshots directory.

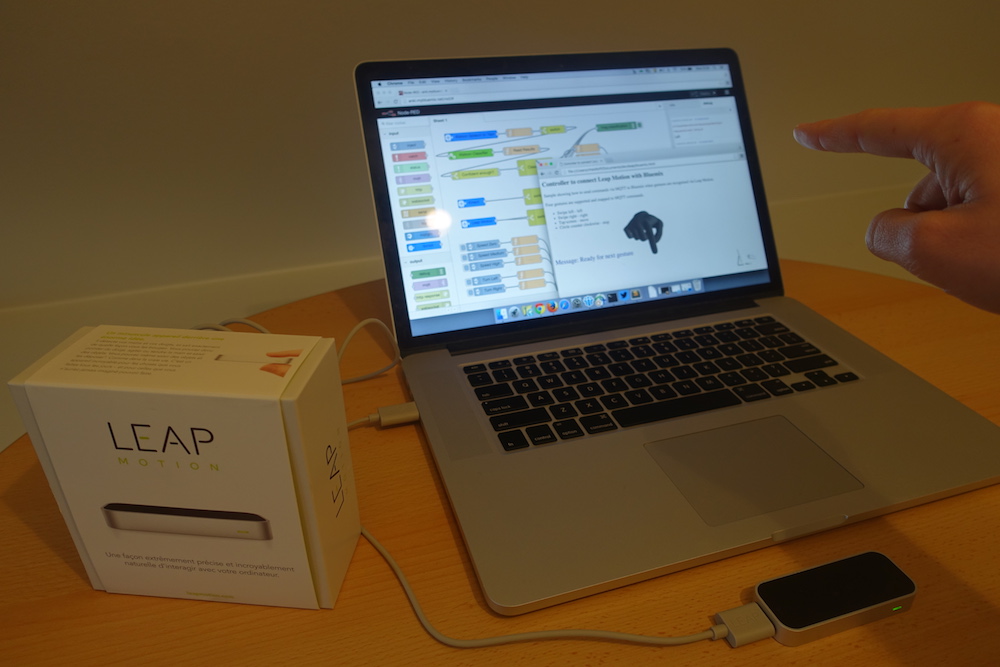

This is the device in front of a MacBook.

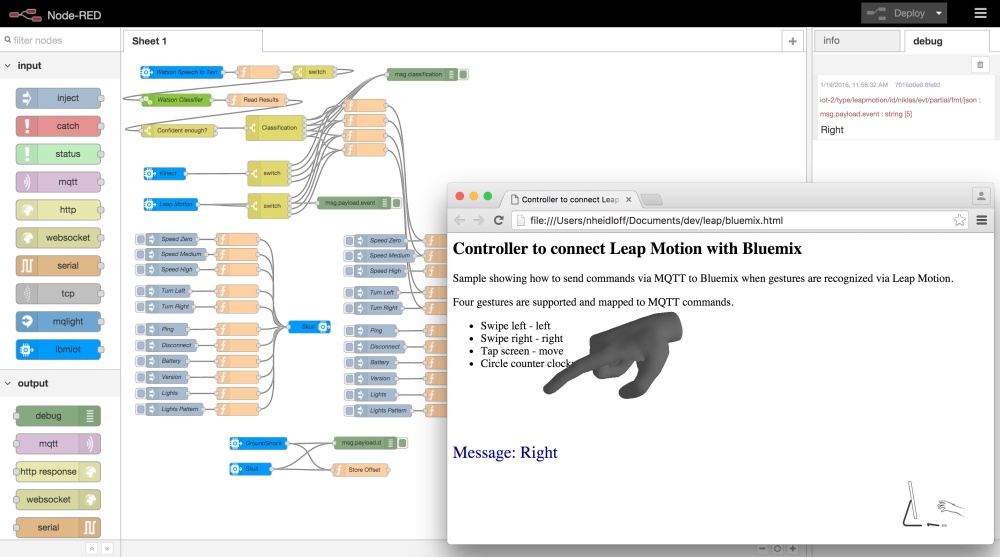

My example is basically just an HTML page with some JavaScript. One nice feature of the Leap Motion JavaScript API is the hand that shows up on the page.
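As a rough illustration of how the four gestures could be picked up in the page, here is a minimal sketch based on the Leap Motion JavaScript API (`Leap.loop` with `enableGestures`). The helper names `classifyGesture` and `onGesture` are hypothetical and not taken from the project, and deciding between left and right by the sign of the swipe's x direction is my assumption about a reasonable approach, not necessarily what the sample does.

```javascript
// Map a Leap Motion gesture object to one of the four gesture names used in this post.
// Returns null for gesture types the demo does not use (for example "keyTap").
function classifyGesture(gesture) {
  switch (gesture.type) {
    case "swipe":
      // direction is a vector [x, y, z]; a negative x points to the user's left.
      return gesture.direction[0] < 0 ? "swipeLeft" : "swipeRight";
    case "screenTap":
      return "screenTap";
    case "circle":
      return "circle";
    default:
      return null;
  }
}

// Hypothetical handler: replace with code that forwards the gesture to the cars.
function onGesture(name) {
  console.log("Recognized gesture: " + name);
}

// Only start the frame loop when the Leap library is loaded in the page.
if (typeof Leap !== "undefined") {
  Leap.loop({ enableGestures: true }, function (frame) {
    frame.gestures.forEach(function (gesture) {
      // Swipes and circles report "start", "update" and "stop" states;
      // reacting only to "stop" avoids firing repeatedly for one movement.
      if (gesture.state !== "stop") return;
      var name = classifyGesture(gesture);
      if (name) onGesture(name);
    });
  });
}
```

Keeping the classification in a small pure function makes it easy to try out without the device attached, for example by calling `classifyGesture({ type: "circle" })` in the browser console.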

I’ve extended the Node-RED flow to receive commands from Leap Motion and forward them to the cars.

The project is implemented with the Leap Motion JavaScript SDK. MQTT messages are sent via Paho to IBM Bluemix and the Internet of Things Foundation.
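To give an idea of what sending a gesture over MQTT might look like, here is a minimal sketch using the Paho JavaScript client (`Paho.MQTT.Client` and `Paho.MQTT.Message`). Everything specific in it is an assumption for illustration: the organization ID, device type, device ID, token, the `gesture` event name, the payload shape and the port are placeholders, and the client ID, topic and host follow the device conventions of the Internet of Things Foundation as I understand them. The actual sample may be wired differently.

```javascript
// Placeholder credentials (hypothetical values, not taken from the project).
var config = {
  orgId: "myorg",
  deviceType: "leapmotion",
  deviceId: "leap01",
  authToken: "my-device-token"
};

// Device client IDs are assumed to follow the pattern d:<org>:<type>:<id>.
function buildClientId(cfg) {
  return "d:" + cfg.orgId + ":" + cfg.deviceType + ":" + cfg.deviceId;
}

// Device events are assumed to be published to iot-2/evt/<eventId>/fmt/json.
function buildEventTopic(eventId) {
  return "iot-2/evt/" + eventId + "/fmt/json";
}

// Assumed payload shape: a JSON object with the data wrapped in "d".
function buildPayload(gestureName) {
  return JSON.stringify({ d: { gesture: gestureName } });
}

// Publish one gesture event on an already connected Paho client.
function publishGesture(client, gestureName) {
  var message = new Paho.MQTT.Message(buildPayload(gestureName));
  message.destinationName = buildEventTopic("gesture");
  client.send(message);
}

// Only connect when the Paho library is loaded in the page.
if (typeof Paho !== "undefined") {
  var host = config.orgId + ".messaging.internetofthings.ibmcloud.com";
  // The port is an assumption; check the service documentation for your setup.
  var client = new Paho.MQTT.Client(host, 1883, buildClientId(config));
  client.connect({
    userName: "use-token-auth",
    password: config.authToken,
    onSuccess: function () { publishGesture(client, "swipeLeft"); },
    onFailure: function (err) { console.log("Connect failed: " + err.errorMessage); }
  });
}
```

On the Bluemix side, a Node-RED flow could then subscribe to these events and translate them into commands for the cars.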

Here is the series of blog articles about the Anki Overdrive with Bluemix demos.