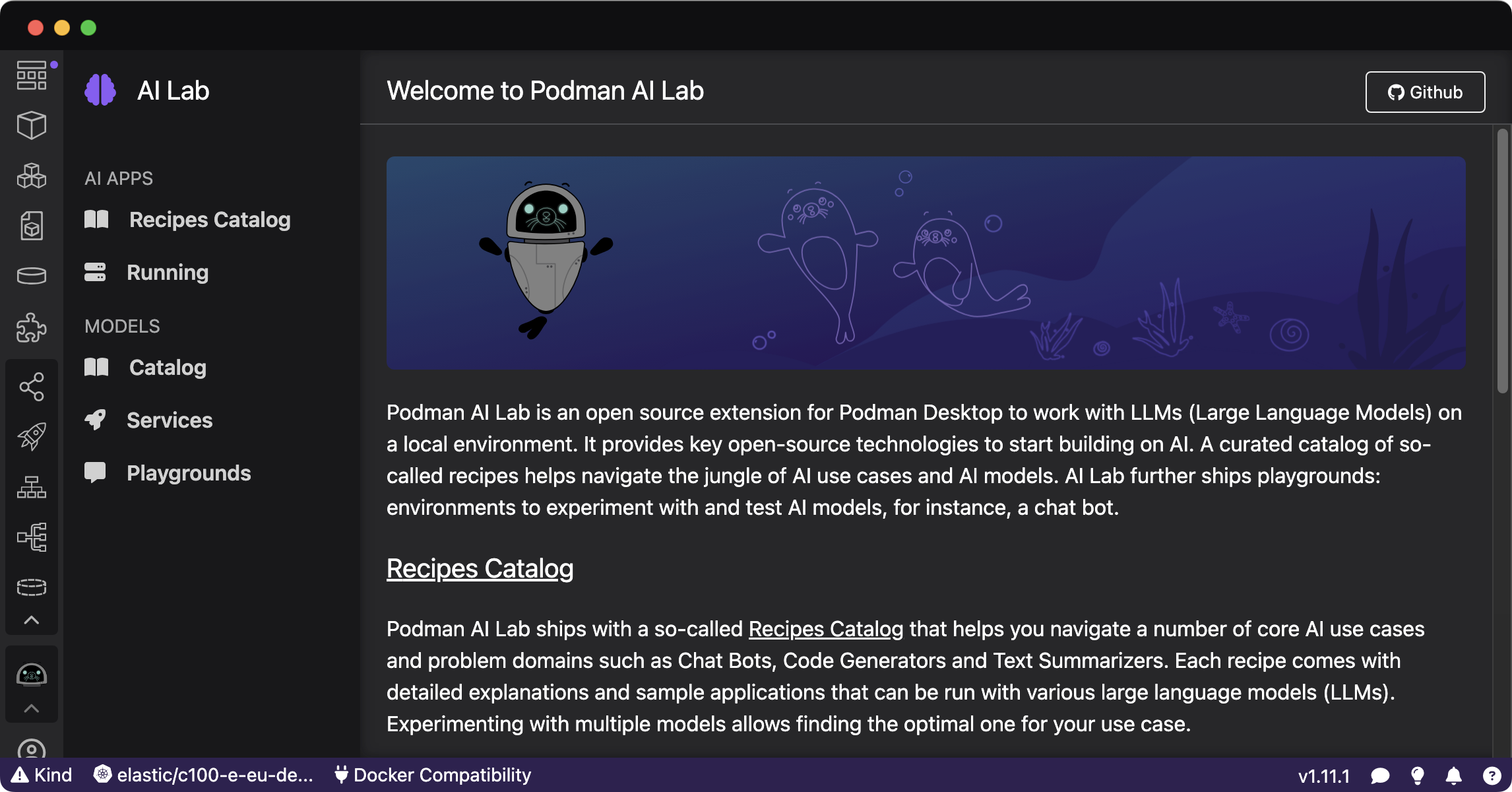

Podman Desktop is a great open source alternative to commercial offerings for running containers locally. With the new Podman AI Lab extension, Large Language Models can be tested locally using techniques like GGUF quantization.

Developers like to write and test applications locally to be as efficient as possible. However, until recently generative AI required GPUs in the cloud. With technologies like llama.cpp and quantization, it has become much easier to run Large Language Models (LLMs) on modern developer hardware such as MacBook Pro machines.
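To make the effect of quantization concrete, here is a rough back-of-the-envelope sketch. It assumes a hypothetical model with 7 billion parameters and compares 16-bit weights with a nominal 4-bit quantization. The function name `weight_size_gb` is made up for this illustration, and the estimate ignores file metadata, quantization scales, and runtime memory such as the KV cache, so real GGUF files will be somewhat larger.

```python
def weight_size_gb(num_parameters: int, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (10^9 bytes)."""
    total_bytes = num_parameters * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 1e9


# Assumption: a hypothetical 7B-parameter model, not a specific release.
PARAMS_7B = 7_000_000_000

fp16_gb = weight_size_gb(PARAMS_7B, 16)  # unquantized half-precision weights
q4_gb = weight_size_gb(PARAMS_7B, 4)     # nominal 4-bit quantization

print(f"16-bit weights: {fp16_gb:.1f} GB")
print(f"4-bit weights:  {q4_gb:.1f} GB")
```

Under these assumptions the weights shrink from about 14.0 GB to about 3.5 GB, which is why a quantized model of this size can fit into the memory of a typical developer laptop.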

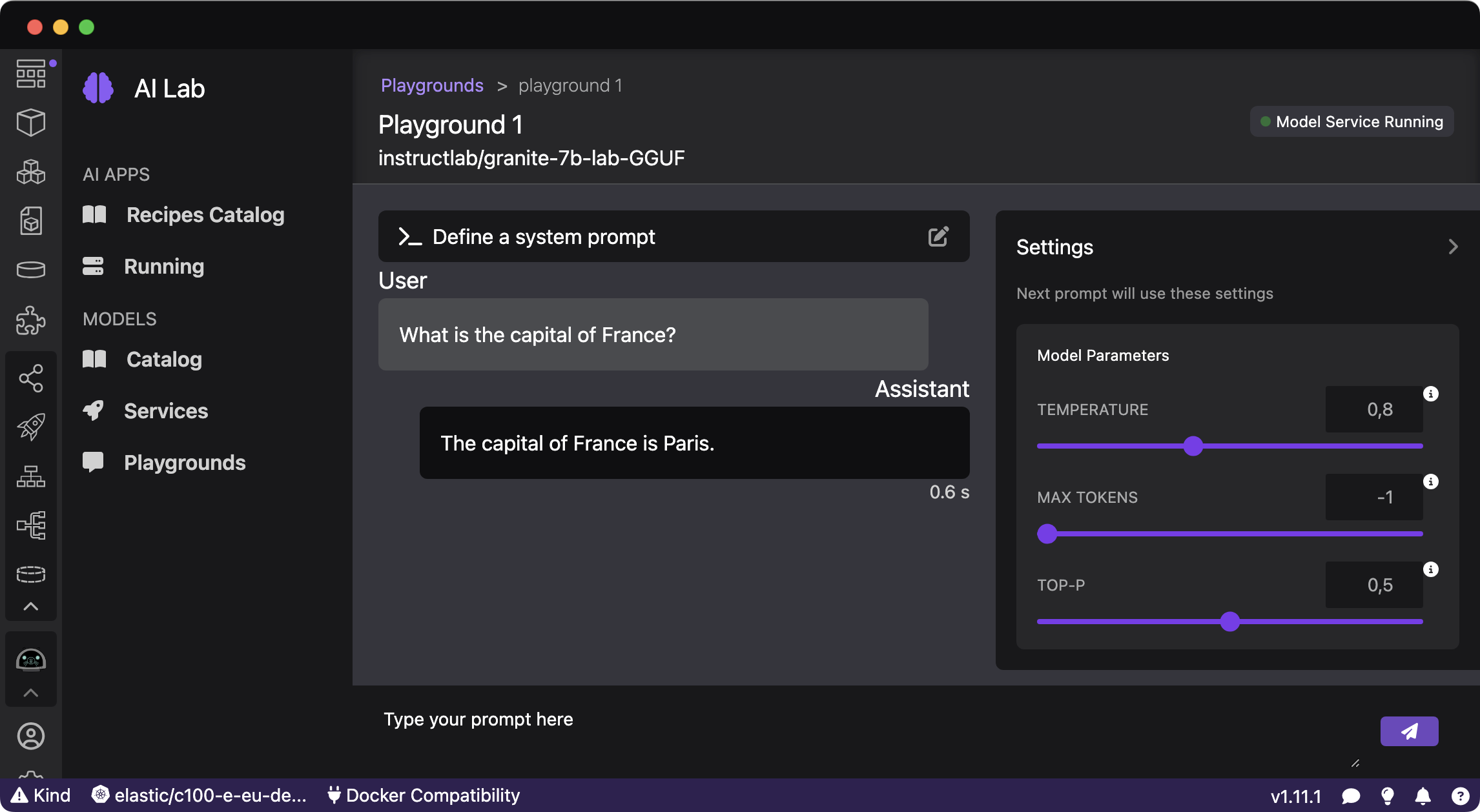

Playground

With the AI Lab extension for Podman Desktop, GGUF-quantized models can be downloaded from Hugging Face. The Hugging Face account TheBloke provides quantized versions of popular models very soon after they have been released.

With the built-in playground, models can be invoked interactively.

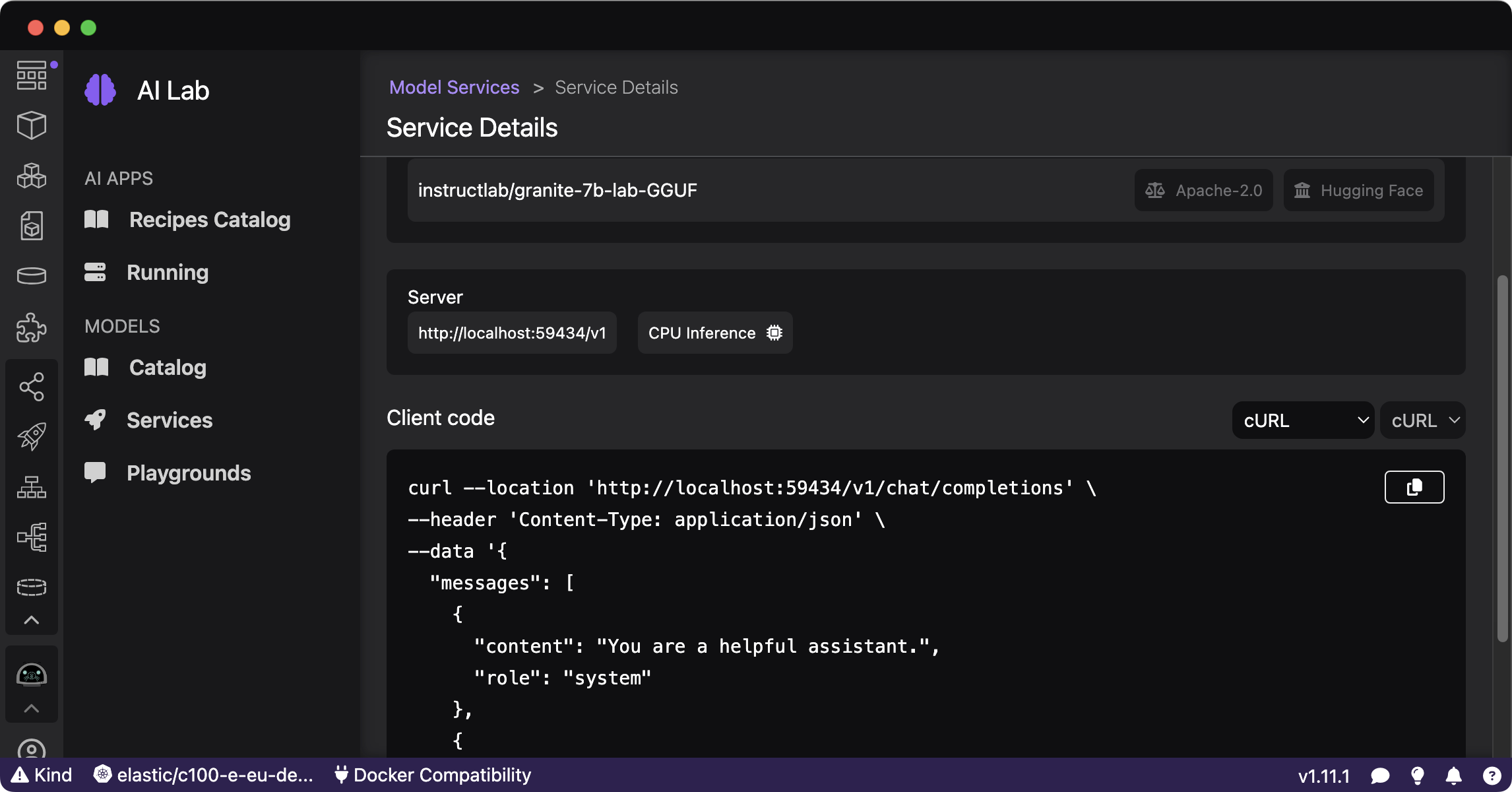

REST APIs

In addition to the playground, REST APIs can be used for model inference.

    curl --location 'http://localhost:59434/v1/chat/completions' \
    --header 'Content-Type: application/json' \
    --data '{
      "messages": [
        {
          "content": "You are a helpful assistant.",
          "role": "system"
        },
        {
          "content": "What is the capital of France?",
          "role": "user"
        }
      ]
    }'
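The same request can also be sent from application code. The following is a minimal sketch in Python that uses only the standard library and mirrors the curl call above. The helper names `build_request` and `ask` are made up for this example. The port `59434` is taken from the curl command and will likely differ on your machine. The response parsing assumes an OpenAI-style body with a `choices` list, which the `/v1/chat/completions` path suggests but which you should verify against the actual response of your model service.

```python
import json
import urllib.request

# Endpoint copied from the curl example; adjust the port to your local service.
URL = "http://localhost:59434/v1/chat/completions"


def build_request(question, system_prompt="You are a helpful assistant.", url=URL):
    """Build the POST request with the same JSON body as the curl example."""
    payload = {
        "messages": [
            {"content": system_prompt, "role": "system"},
            {"content": question, "role": "user"},
        ]
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question):
    """Send the question to the local model service and return the answer text."""
    with urllib.request.urlopen(build_request(question), timeout=120) as response:
        body = json.load(response)
    # Assumption: OpenAI-style response shape (choices -> message -> content).
    return body["choices"][0]["message"]["content"]
```

With the model service running, calling `ask("What is the capital of France?")` should return the generated answer as a string. Keeping the request construction in its own function makes it possible to inspect the payload without a running server.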

Next Steps

To learn more, check out the Watsonx.ai documentation and the Watsonx.ai landing page.