Over the last few months I have worked on a new initiative from IBM called Embeddable AI. You can now run some of the Watson services as containers anywhere.

Watch the short video on the Embeddable AI home page for an introduction or this interview with Rob Thomas.

Why embeddable AI?

For years, IBM Research has invested in developing AI capabilities which are embedded in IBM software offerings. We are now making the same capabilities available to our partners, providing them a simpler path to create AI-powered solutions.

While it is easy to consume software as a service, certain workloads need to run on premises. Good examples are AI applications whose data must not leave certain countries. Containers are a great technology for running AI services anywhere.

There are many resources that explain the Embeddable AI offering. Here are some that help you get started:

- Watson NLP

- Watson NLP Helm Chart

- Watson Speech

- Automation for Watson NLP deployments

- IBM entitlement API key

My team has implemented a Helm chart which makes it easy to deploy Watson NLP in Kubernetes environments like OpenShift.

    $ oc login --token=sha256~xxx --server=https://xxx
    $ oc new-project watson-demo
    $ oc create secret docker-registry \
        --docker-server=cp.icr.io \
        --docker-username=cp \
        --docker-password=<your IBM Entitlement Key> \
        ibm-entitlement-key
    $ git clone https://github.com/cloud-native-toolkit/terraform-gitops-watson-nlp
    $ git clone https://github.ibm.com/isv-assets/watson-automation
    # Set acceptLicense: true in values.yaml
    $ cp watson-automation/helm-nlp/values.yaml terraform-gitops-watson-nlp/chart/watson-nlp/values.yaml
    $ cd terraform-gitops-watson-nlp/chart/watson-nlp
    $ helm install -f values.yaml watson-embedded .

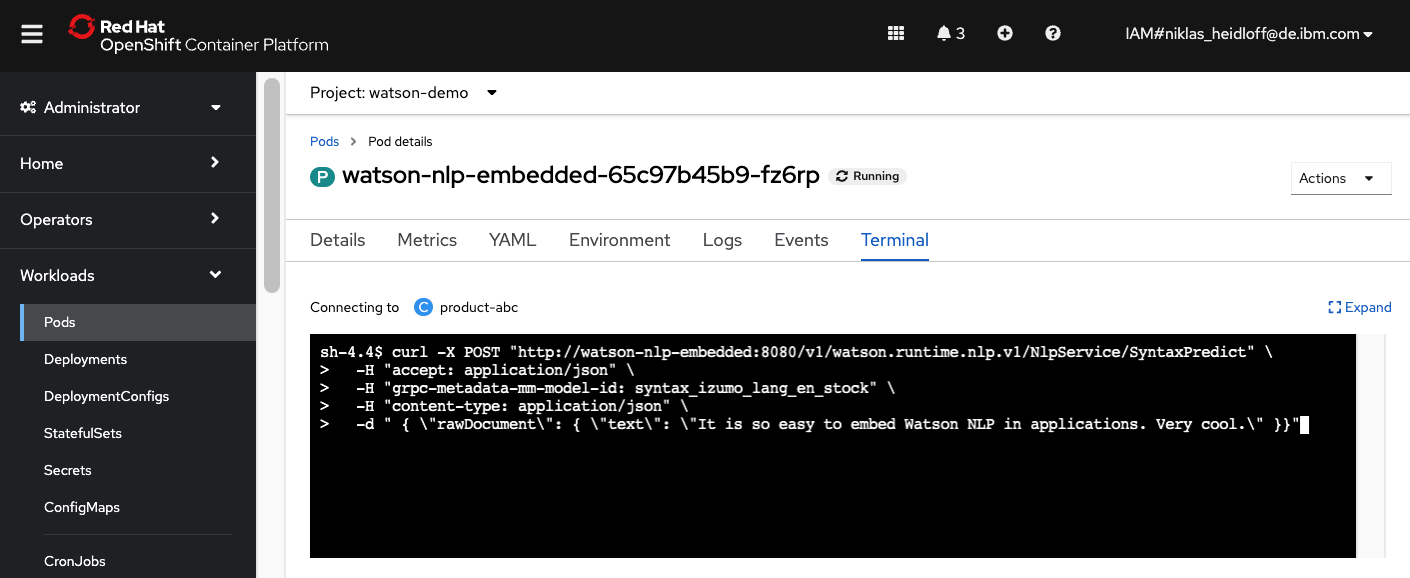

As a result, you’ll see the deployed Watson NLP pod in OpenShift.
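If you prefer the command line over the OpenShift console, the same check can be sketched as a small shell function. This is only a minimal sketch: the function name `check_watson_nlp` is made up for illustration, and the defaults assume the `watson-demo` project and the `watson-embedded` release name used in the commands above.

```shell
#!/usr/bin/env bash
# Sketch only: check_watson_nlp is a hypothetical helper, not part of the chart.
# Defaults assume the project and release names used earlier in this post.
check_watson_nlp() {
  local namespace="${1:-watson-demo}"
  local release="${2:-watson-embedded}"

  # Show the status of the Helm release in the given project.
  helm status "$release" --namespace "$namespace" || return 1

  # List the pods in the project; the Watson NLP pod should appear here.
  oc get pods --namespace "$namespace"
}
```

Calling `check_watson_nlp` without arguments uses the defaults; pass a different project and release name as the first and second argument if you changed them.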

To find out more about the toolkit, check out the documentation and the sample above.