Custom Python code for agentic applications can be deployed on watsonx.ai so that different agentic applications can be managed consistently and accessed securely. Templates help developers and AI engineers get started quickly.

Watsonx.ai comes with a feature called ‘AI Service’, which allows packaging and deploying custom Python code in a way similar to containers. My post Deploying Agentic Applications on watsonx.ai describes the core concepts.

Watsonx.ai services like Agent Lab generate code that contains and deploys AI services. This code is a good starting point, but since everything lives in a single notebook, it is not trivial to manage. To make use of source control systems and CI/CD pipelines, IBM proposes a specific project structure.

Templates

There are various templates for different agent frameworks and the list is growing.

- Base
  - autogen-agent
  - beeai-framework-react-agent
  - crewai-websearch-agent
  - langgraph-react-agent
  - llamaindex-websearch-agent
- Community
  - langgraph-agentic-rag
  - langgraph-arxiv-research
  - langgraph-tavily-tool

AI Service

The Python code needs to be wrapped in an AI service: an outer function that returns the two functions ‘generate’ and ‘generate_stream’, which handle regular and streaming requests. The LangGraph template comes with an example agent and an example tool.


```python
def deployable_ai_service(context, url=None, space_id=None, model_id=None, thread_id=None):
    from typing import Generator

    from langgraph_react_agent_base.agent import get_graph_closure
    from ibm_watsonx_ai import APIClient, Credentials
    ...

    # Authenticate against watsonx.ai with a token generated from the runtime context
    client = APIClient(
        credentials=Credentials(url=url, token=context.generate_token()),
        space_id=space_id,
    )
    # Build the agent graph for the configured model (helper from the template)
    graph = get_graph_closure(client, model_id)
    ...

    def generate(context) -> dict:
        ...
        return execute_response

    def generate_stream(context) -> Generator[dict, ..., ...]:
        ...

    return generate, generate_stream
```
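To make this contract concrete, here is a minimal, self-contained sketch that runs without watsonx.ai. It replaces the LangGraph graph with a hypothetical echo function, and the `FakeContext` class is a hypothetical local stand-in for the runtime context. The payload shape (a `messages` list) and the response layout (`body` with chat-style `choices`) are assumptions modeled on the template; check them against the generated code before relying on them.

```python
from typing import Generator


def deployable_ai_service(context):
    """Sketch of an AI service: returns the generate/generate_stream pair.

    Assumptions: the request payload contains a "messages" list in chat
    format, and an echo function stands in for the template's LangGraph graph.
    """

    def run_agent(messages: list[dict]) -> str:
        # Hypothetical stand-in for invoking the agent graph:
        # echo the most recent user message.
        last_user = next(
            (m["content"] for m in reversed(messages) if m.get("role") == "user"), ""
        )
        return f"Echo: {last_user}"

    def generate(context) -> dict:
        # Read the JSON payload of the incoming request (assumed accessor).
        payload = context.get_json()
        answer = run_agent(payload.get("messages", []))
        return {
            "headers": {"Content-Type": "application/json"},
            "body": {
                "choices": [
                    {"index": 0, "message": {"role": "assistant", "content": answer}}
                ]
            },
        }

    def generate_stream(context) -> Generator[dict, None, None]:
        payload = context.get_json()
        answer = run_agent(payload.get("messages", []))
        # Yield the answer word by word as streaming deltas.
        for i, token in enumerate(answer.split(" ")):
            piece = token if i == 0 else f" {token}"
            yield {
                "choices": [
                    {"index": 0, "delta": {"role": "assistant", "content": piece}}
                ]
            }

    return generate, generate_stream


class FakeContext:
    """Hypothetical local stand-in for the runtime context object."""

    def __init__(self, payload: dict):
        self._payload = payload

    def get_json(self) -> dict:
        return self._payload

    def generate_token(self) -> str:
        return "dummy-token"


if __name__ == "__main__":
    ctx = FakeContext({"messages": [{"role": "user", "content": "Hello agent"}]})
    generate, generate_stream = deployable_ai_service(ctx)
    print(generate(ctx)["body"]["choices"][0]["message"]["content"])
    print("".join(c["choices"][0]["delta"]["content"] for c in generate_stream(ctx)))
```

Because `generate` and `generate_stream` are plain closures, a stub context like this makes it easy to unit-test the service logic before deploying it.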

Project Structure

Projects have the following structure:

- langgraph-react-agent
  - src
    - langgraph_react_agent_base
      - agent.py
      - tools.py
  - schema
  - ai_service.py
  - config.toml
  - pyproject.toml

The ‘config.toml’ file contains the following configuration:

- API key

- Watsonx Endpoint

- Space ID

- Model ID

- Custom key/value pairs and credentials


CLI and Python

AI services can be deployed either via a CLI or with Python code. To deploy with the Python script, run:

```bash
python scripts/deploy.py
```

The code can be tested locally, and the deployed service can be invoked remotely:

```bash
python examples/execute_ai_service_locally.py
python examples/query_existing_deployment.py
```

Deployment

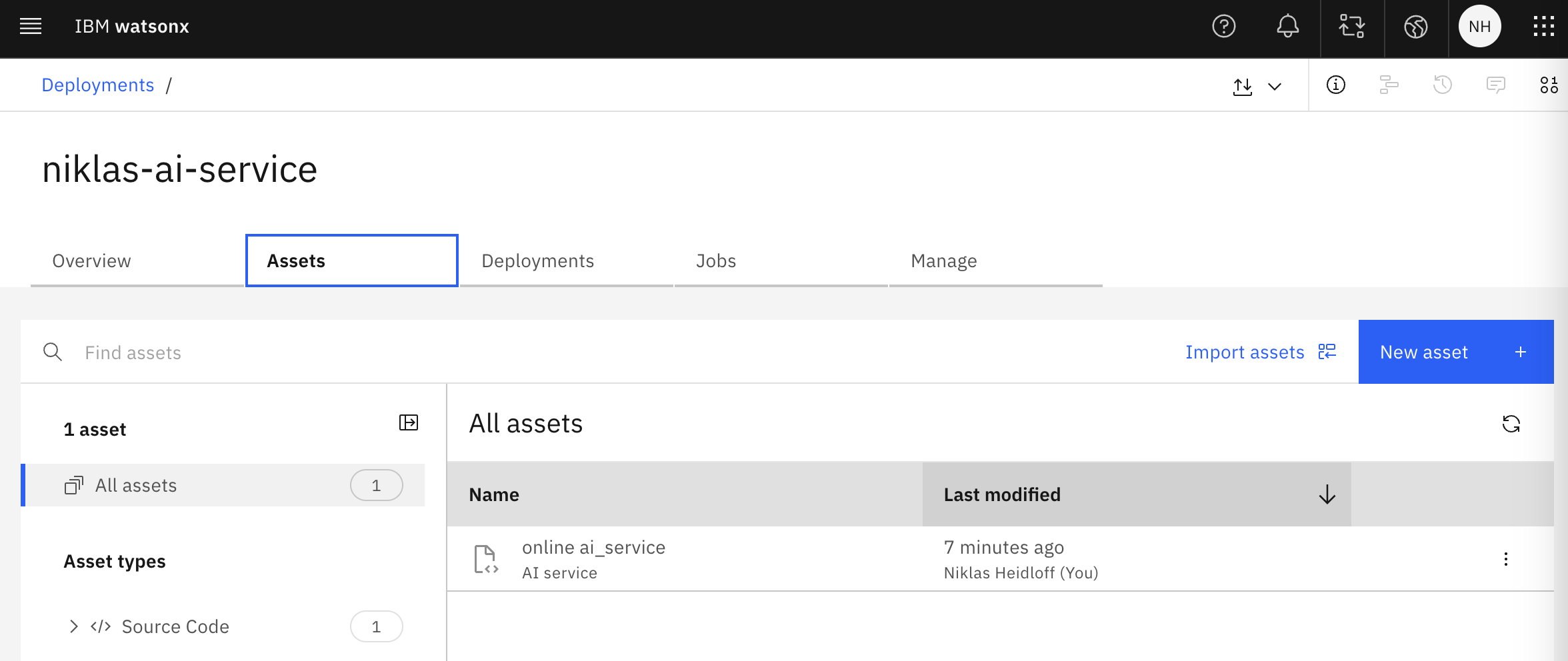

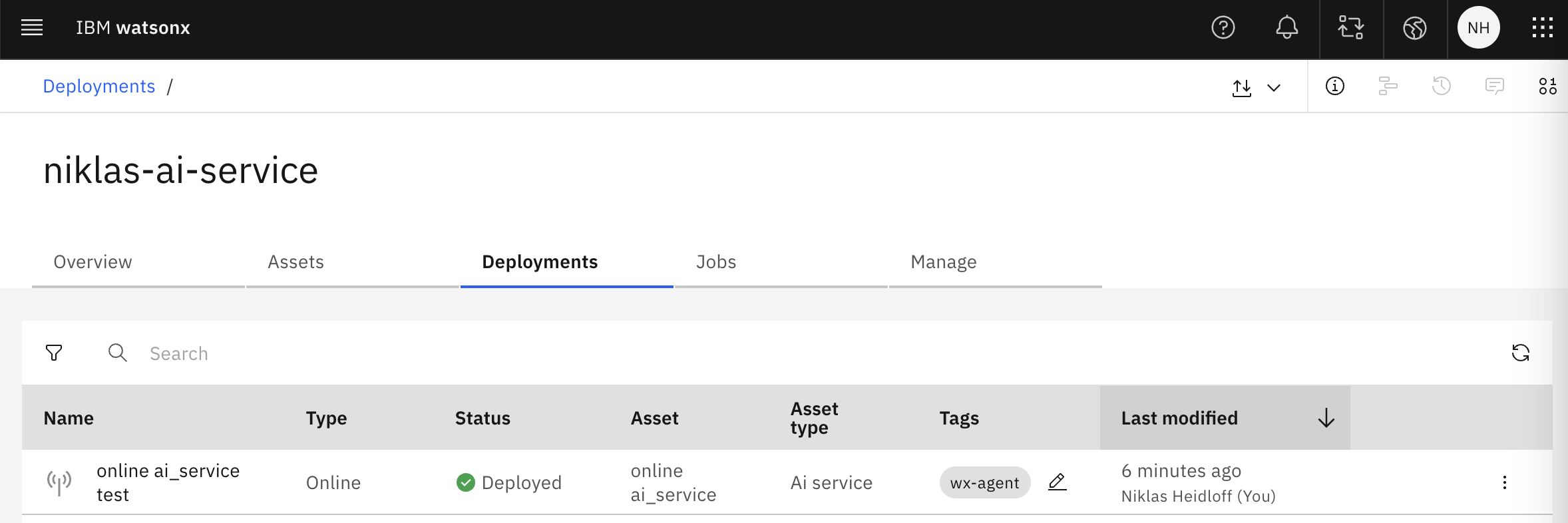

The deployment creates a code asset and the AI service.

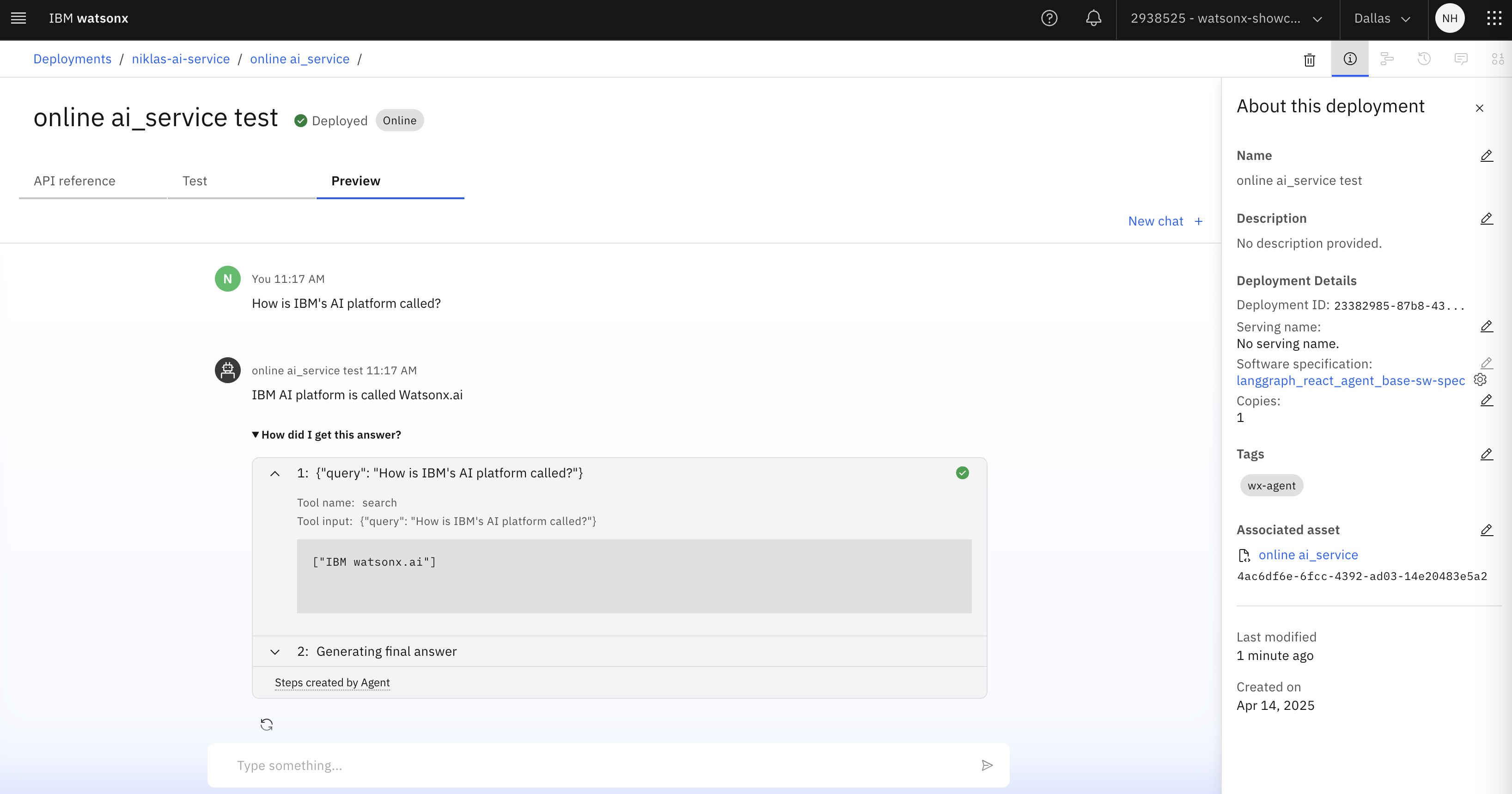

There is also a chat user interface to try out the AI service in a playground (see the image at the top of this post).

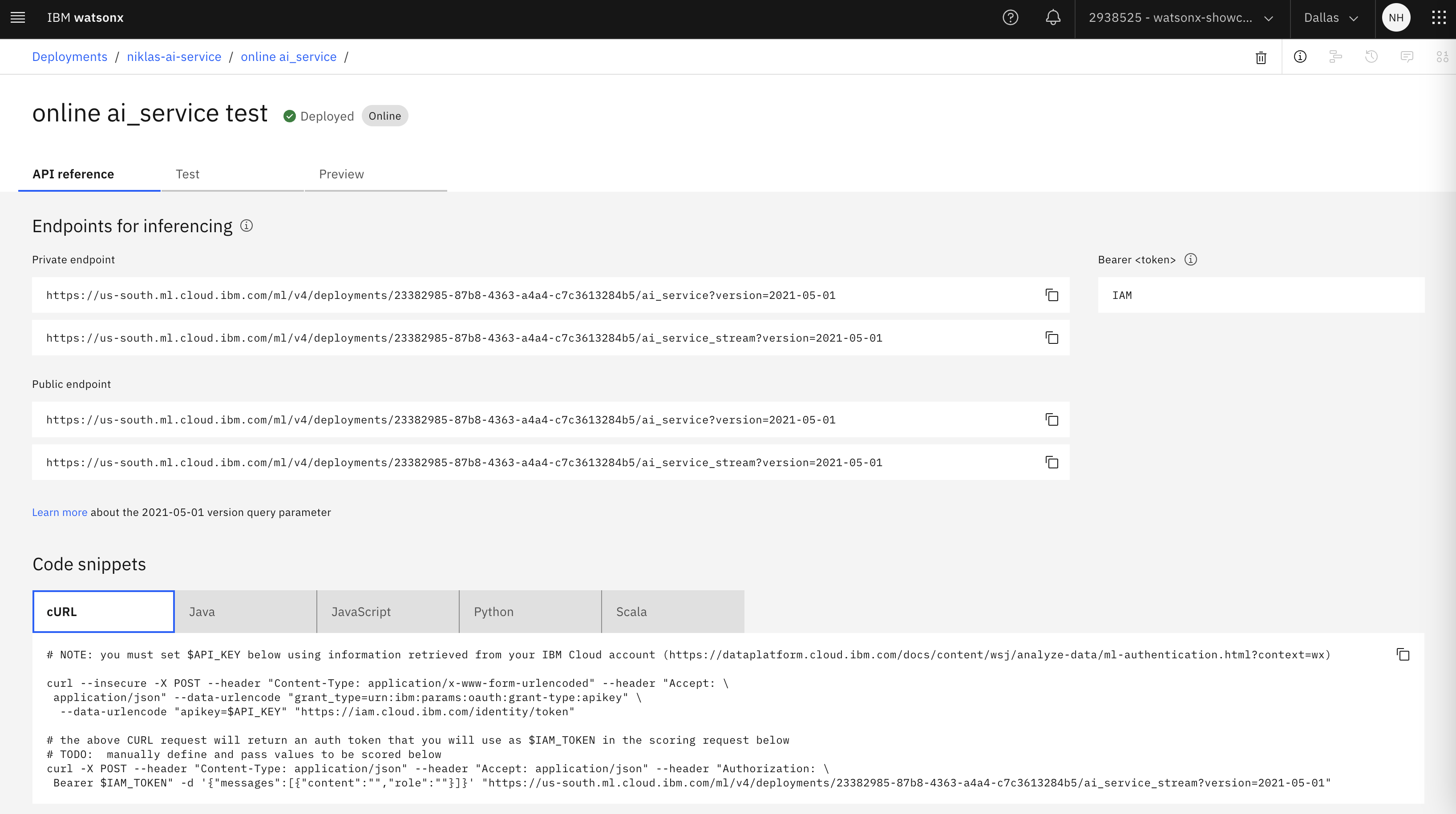

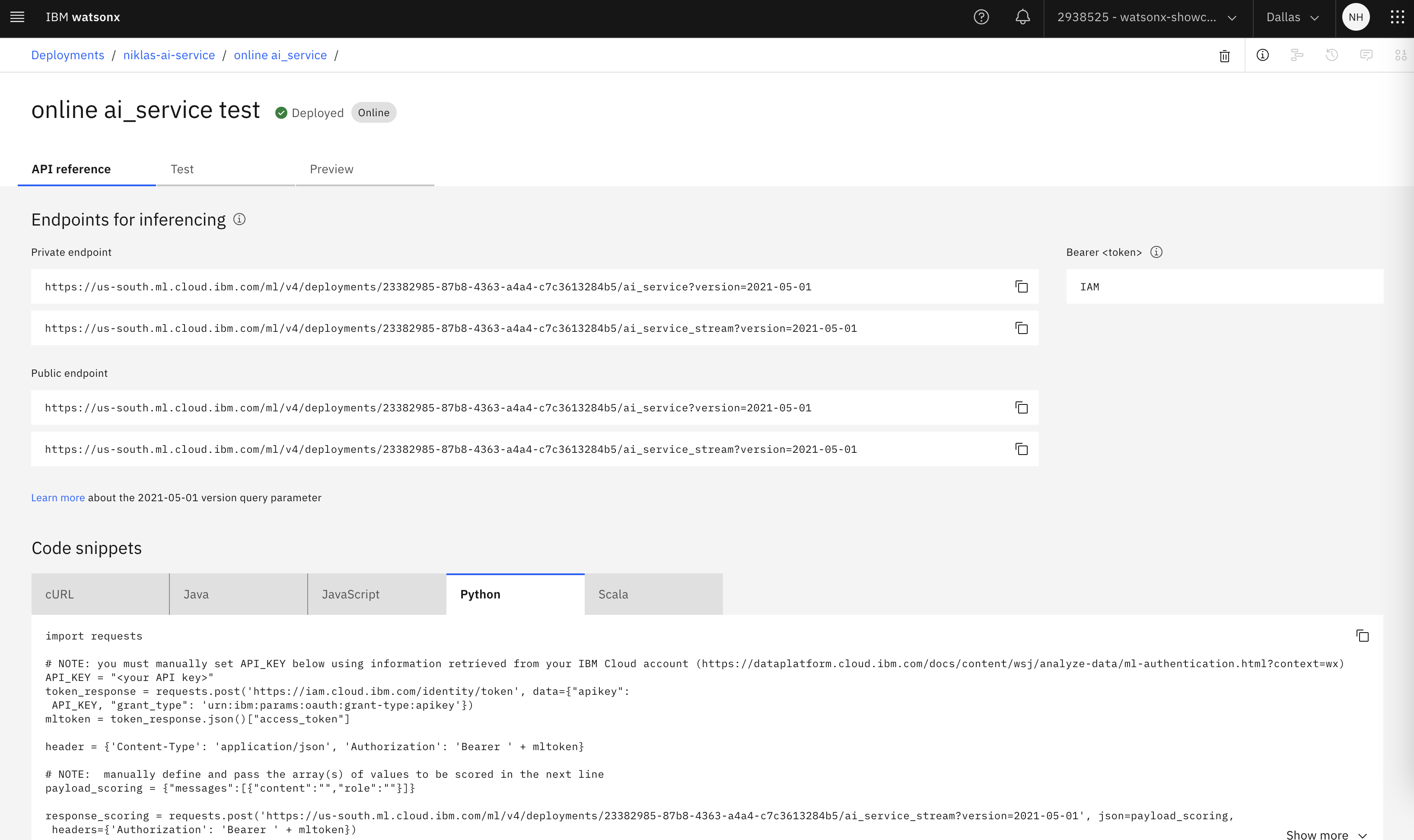

API

After the deployment, endpoints are provided, including authentication.

There are also examples of how to invoke the endpoints from several programming languages.
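The following sketch shows what such a call can look like with only the Python standard library: it first exchanges an IBM Cloud API key for an IAM bearer token and then POSTs a chat payload to the deployment. The token endpoint and grant type follow IBM Cloud's standard IAM flow; the `/ml/v4/deployments/{id}/ai_service` path, the `version` date and the `messages` payload are assumptions, so copy the exact URL and payload format from the deployment's API reference page.

```python
import json
import urllib.parse
import urllib.request

# IBM Cloud IAM endpoint that exchanges an API key for a bearer token
IAM_TOKEN_URL = "https://iam.cloud.ibm.com/identity/token"


def build_token_request(api_key: str) -> urllib.request.Request:
    """Build the POST request that exchanges an API key for an IAM token."""
    data = urllib.parse.urlencode(
        {"grant_type": "urn:ibm:params:oauth:grant-type:apikey", "apikey": api_key}
    ).encode()
    return urllib.request.Request(
        IAM_TOKEN_URL,
        data=data,
        headers={
            "Content-Type": "application/x-www-form-urlencoded",
            "Accept": "application/json",
        },
        method="POST",
    )


def build_ai_service_request(
    base_url: str, deployment_id: str, token: str, question: str
) -> urllib.request.Request:
    """Build a chat request for a deployed AI service (endpoint path is an assumption)."""
    url = (
        f"{base_url.rstrip('/')}/ml/v4/deployments/{deployment_id}"
        "/ai_service?version=2021-05-01"  # assumed path and API version date
    )
    body = json.dumps({"messages": [{"role": "user", "content": question}]}).encode()
    return urllib.request.Request(
        url,
        data=body,
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def invoke(request: urllib.request.Request) -> dict:
    """Send the request over the network and decode the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

For example, `token = invoke(build_token_request(api_key))["access_token"]` obtains a token, and `invoke(build_ai_service_request(url, deployment_id, token, "What is watsonx.ai?"))` sends a question. Separating request construction from sending keeps the request logic testable offline.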

Next Steps

Here are some resources:

- Deploying Agentic Applications on watsonx.ai

- Generating Agentic Applications with watsonx.ai Agent Lab

- Resource Specifications for Custom Code in watsonx.ai

- Predefined software specifications

- Predefined hardware specifications

- Customizing runtimes with external libraries and packages

To learn more, check out the watsonx.ai documentation and the watsonx.ai landing page.