Red Hat Enterprise Linux AI (RHEL AI) comes with InstructLab for fine-tuning large language models. This post describes how to set it up on IBM Cloud.

Red Hat Enterprise Linux AI is a foundation model platform to seamlessly develop, test, and run Granite family large language models (LLMs) for enterprise applications.

At TechXchange, IBM previewed InstructLab integrated into watsonx.ai in a multi-tenant environment. For regulated industries, you can also run InstructLab on IBM Cloud via RHEL AI in isolated environments.

Documentation

Several resources are available. This post only covers the highlights of the setup process.

- Customizations of Generative AI Models with InstructLab

- Installing RHEL AI on IBM Cloud

- Download RHEL AI Images

- Generating a custom LLM using RHEL AI

- Red Hat Enterprise Linux AI

- InstructLab Open Source

Custom Image

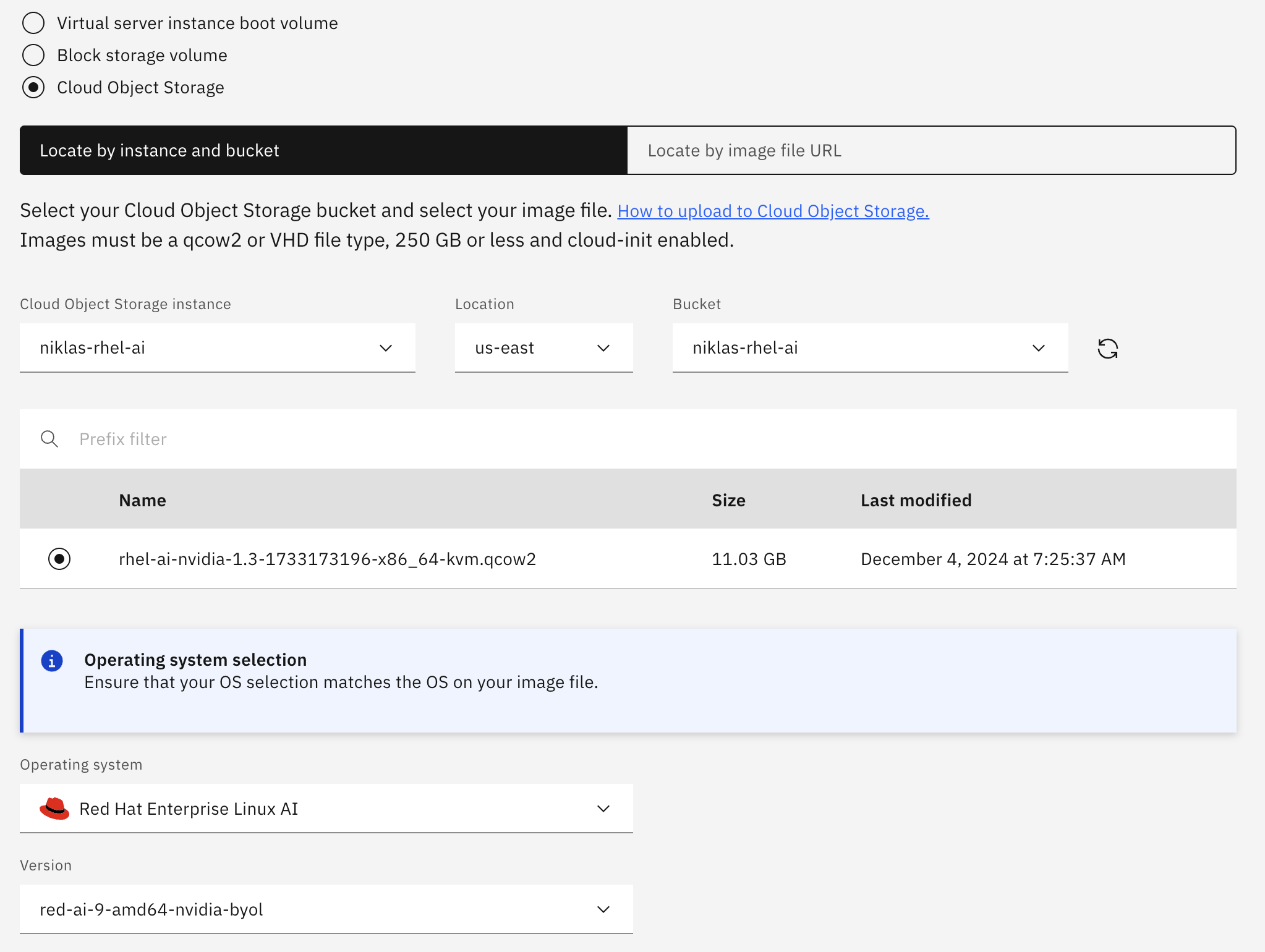

First, the RHEL AI image (12 GB) needs to be downloaded and uploaded to IBM Cloud Object Storage. For the following configuration I chose the qcow2 version (rhel-ai-nvidia-1.3-1733173196-x86_64-kvm.qcow2), which is much smaller than the RAW version.
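Because the qcow2 and RAW downloads are easy to mix up, a quick check before a 12 GB upload can save time. The sketch below is a hypothetical helper, not part of RHEL AI: it reads only the first eight bytes of the file and looks for the qcow2 magic number `QFI\xfb` followed by a big-endian format version. `qemu-img info <file>` gives the same answer if QEMU tools are installed.

```python
import struct
import sys

# A qcow2 file starts with the 4-byte magic b"QFI\xfb", followed by a
# 4-byte big-endian format version (2 or 3 for current images).
QCOW2_MAGIC = b"QFI\xfb"


def qcow2_version_from_header(header: bytes):
    """Return the qcow2 version encoded in the first 8 bytes, or None."""
    if len(header) < 8 or header[:4] != QCOW2_MAGIC:
        return None  # not a qcow2 image (for example the RAW download)
    (version,) = struct.unpack(">I", header[4:8])
    return version


def qcow2_version(path: str):
    """Read only the header, so even a 12 GB image is checked instantly."""
    with open(path, "rb") as f:
        return qcow2_version_from_header(f.read(8))


if __name__ == "__main__":
    # Usage: python check_image.py rhel-ai-nvidia-1.3-...-kvm.qcow2
    for image in sys.argv[1:]:
        version = qcow2_version(image)
        if version is None:
            print(f"{image}: not a qcow2 image")
        else:
            print(f"{image}: qcow2 version {version}")
```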

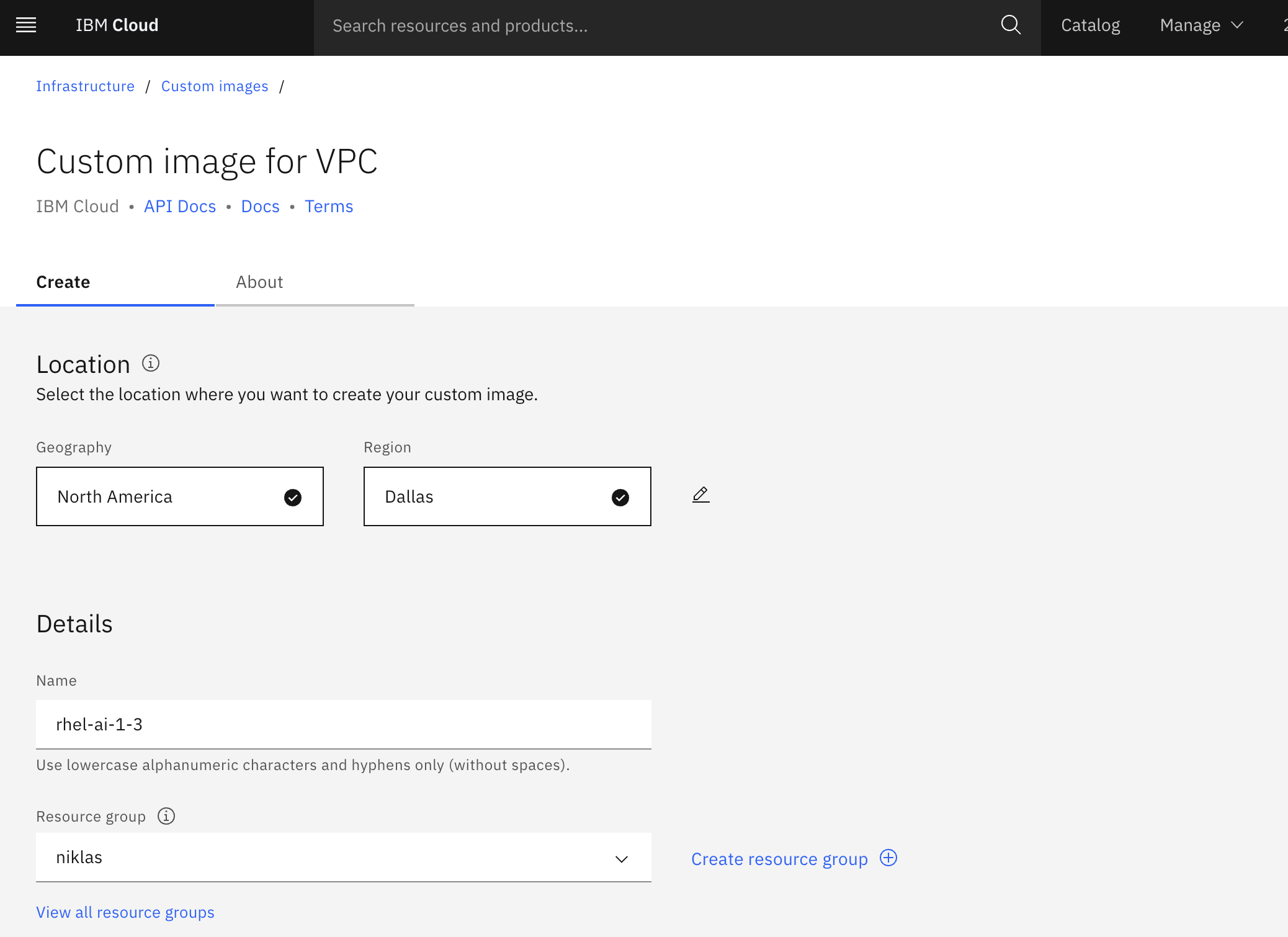

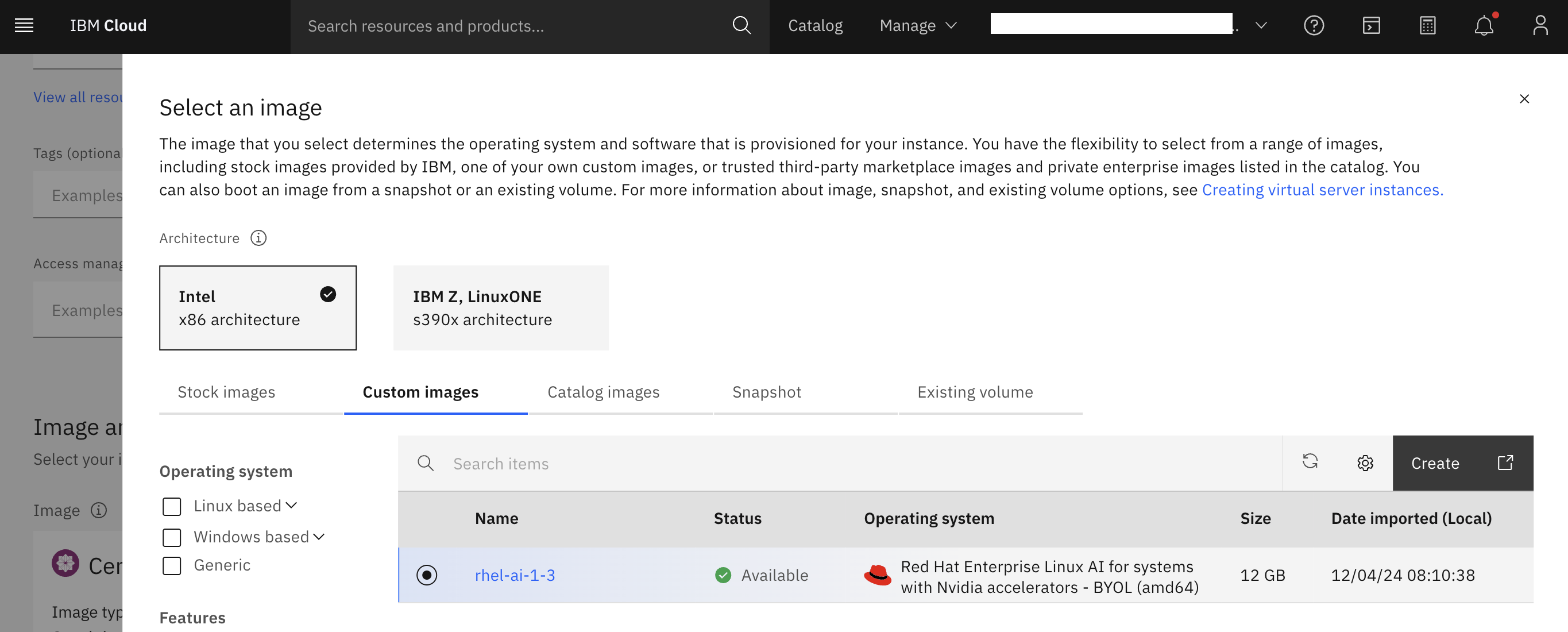

Next, a custom image that points to the uploaded object is defined, either via the API or the UI.
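For the API route, the request body mainly needs a name, a `cos://<region>/<bucket>/<object>` reference to the uploaded file, and an operating system name. The sketch below only builds the URL and JSON body for the VPC `POST /v1/images` call and sends nothing. The bucket name, image name, API version date, and OS name `red-9-amd64` are assumptions for illustration; valid OS names can be listed with `ibmcloud is operating-systems`. As far as I know, the VPC image service also needs an IAM service-to-service authorization with Reader access to the bucket before it can read the file.

```python
import json

# Assumption: any supported API version date works; check the IBM Cloud
# VPC API reference for the current one.
API_VERSION = "2024-11-12"


def cos_href(region: str, bucket: str, obj: str) -> str:
    """cos:// URL from which the VPC image service reads the uploaded file."""
    return f"cos://{region}/{bucket}/{obj}"


def image_create_request(region, bucket, obj, image_name, os_name):
    """Return (url, body) for POST /v1/images; nothing is sent here."""
    url = (f"https://{region}.iaas.cloud.ibm.com/v1/images"
           f"?version={API_VERSION}&generation=2")
    body = {
        "name": image_name,
        "file": {"href": cos_href(region, bucket, obj)},
        "operating_system": {"name": os_name},
    }
    return url, body


if __name__ == "__main__":
    # Hypothetical bucket, image, and OS names for illustration only.
    url, body = image_create_request(
        "us-south", "my-rhel-ai-bucket",
        "rhel-ai-nvidia-1.3-1733173196-x86_64-kvm.qcow2",
        "rhel-ai-1-3", "red-9-amd64")
    print("POST", url)
    print(json.dumps(body, indent=2))
```

The actual call additionally needs an `Authorization: Bearer <IAM access token>` header. The CLI equivalent is roughly `ibmcloud is image-create rhel-ai-1-3 --file cos://... --os-name ...`.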

Virtual Server Instance

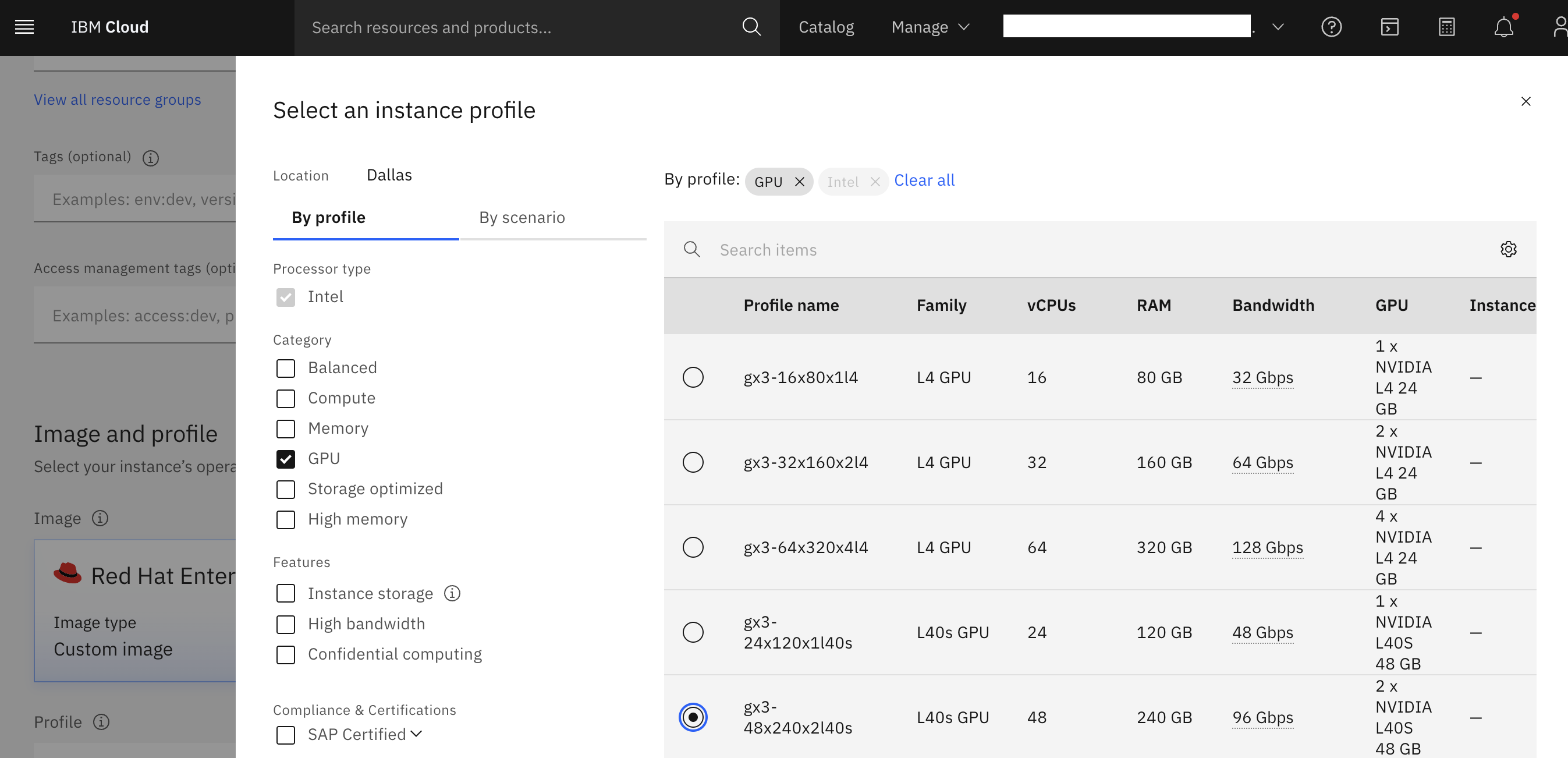

Now a new virtual server instance can be created in a VPC.

For a first simple sample, two NVIDIA L40S GPUs are attached.
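On IBM Cloud the GPUs come with the instance profile, so "two L40S GPUs" translates into choosing a GPU profile. The sketch below assumes the profile `gx3-48x240x2l40s` (48 vCPUs, 240 GB RAM, 2x L40S); confirm the name and regional availability with `ibmcloud is instance-profiles`. It parses the GPU count from such a name and builds a body for the VPC `POST /v1/instances` call. All IDs are hypothetical placeholders, and nothing is sent.

```python
# Assumption: GPU profile names look like "gx3-<vCPUs>x<GB RAM>x<count><gpu>",
# e.g. "gx3-48x240x2l40s"; plain CPU profiles look like "bx2-2x8".
DEFAULT_PROFILE = "gx3-48x240x2l40s"


def gpu_count(profile: str) -> int:
    """Parse the GPU count from a profile name (0 for CPU-only profiles)."""
    family_and_size = profile.split("-", 1)
    if len(family_and_size) != 2:
        return 0
    parts = family_and_size[1].split("x")
    if len(parts) < 3:
        return 0  # no GPU segment, e.g. "bx2-2x8"
    digits = ""
    for ch in parts[2]:  # leading digits of "2l40s" -> "2"
        if not ch.isdigit():
            break
        digits += ch
    return int(digits) if digits else 0


def instance_create_body(name, image_id, vpc_id, zone, subnet_id, key_id,
                         profile=DEFAULT_PROFILE):
    """Body for POST /v1/instances (VPC API); all IDs are placeholders."""
    return {
        "name": name,
        "profile": {"name": profile},
        "image": {"id": image_id},  # the custom RHEL AI image
        "vpc": {"id": vpc_id},
        "zone": {"name": zone},
        "primary_network_interface": {"subnet": {"id": subnet_id}},
        "keys": [{"id": key_id}],  # SSH key used to log in later
    }


if __name__ == "__main__":
    print(DEFAULT_PROFILE, "->", gpu_count(DEFAULT_PROFILE), "GPUs")
```

In this shape, the floating IP is reserved and bound to the instance's network interface in a separate step.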

InstructLab

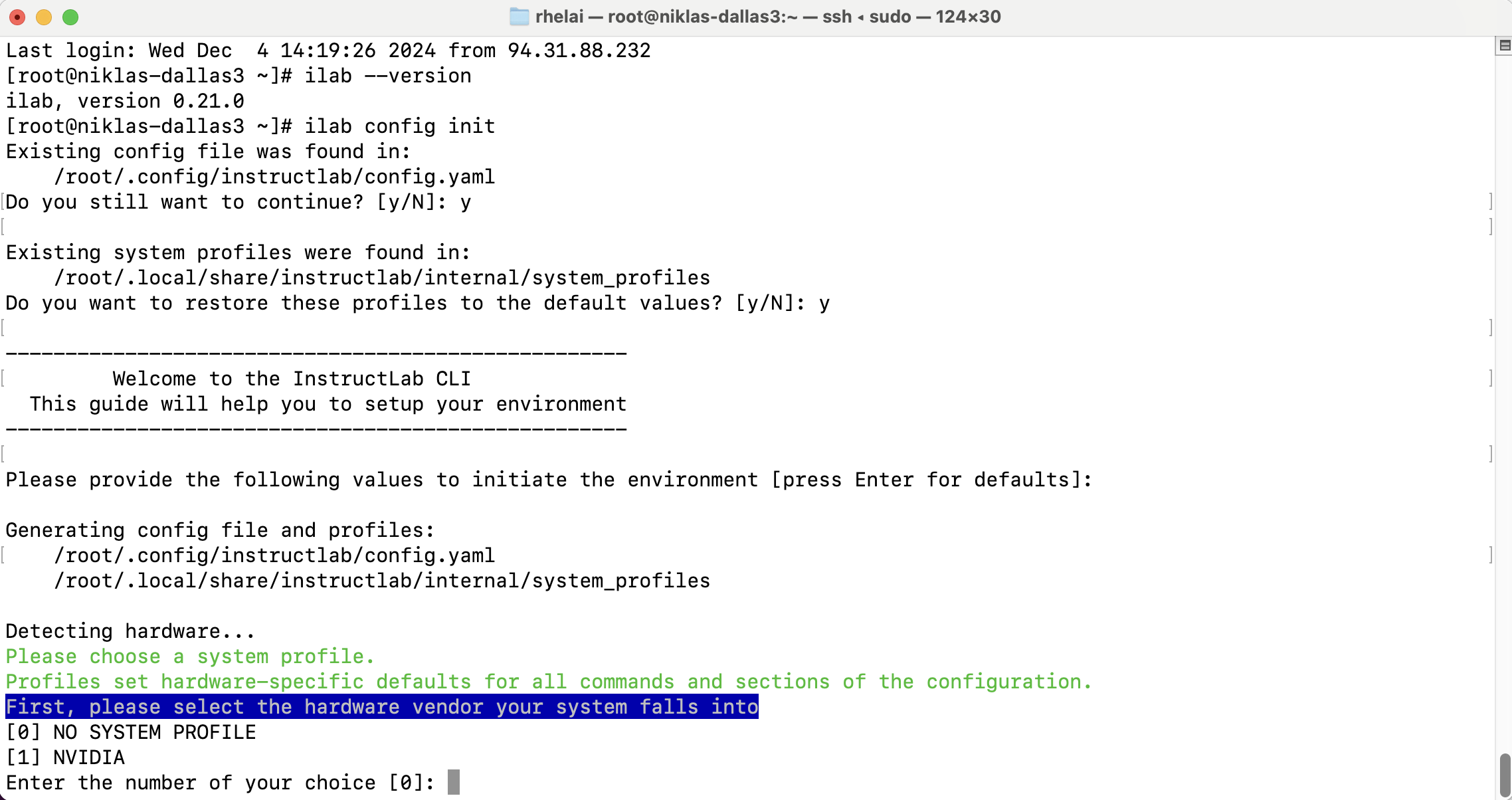

The server can be accessed via a floating IP and SSH, and InstructLab can then be configured and run there. Note that RHEL AI 1.3 comes with InstructLab 0.21.0.
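Once logged in (for example `ssh <user>@<floating-ip>`; the user name depends on the image), the usual InstructLab flow is a handful of `ilab` commands. The sketch below is a hypothetical wrapper that runs them in order and stops at the first failure; by default it only prints the commands (dry run). The flags are deliberately omitted: on RHEL AI, downloading the Granite models from registry.redhat.io needs a registry login and extra options such as `--repository`, so check the RHEL AI documentation for the exact arguments in 0.21.0. `ilab model serve` and `ilab model chat` are left out because they are long-running and interactive.

```python
import shlex
import subprocess

# Typical InstructLab steps in order; flags omitted on purpose (see above).
ILAB_STEPS = [
    ["ilab", "config", "init"],     # create the config for this system
    ["ilab", "model", "download"],  # fetch the base/teacher models
    ["ilab", "data", "generate"],   # synthetic data from the taxonomy
    ["ilab", "model", "train"],     # fine-tune on the generated data
]


def run_steps(steps, dry_run=True):
    """Run each command in order and return the command lines.

    With dry_run=True nothing is executed; otherwise check=True makes
    subprocess.run raise CalledProcessError on the first failing step.
    """
    lines = []
    for cmd in steps:
        lines.append(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
    return lines


if __name__ == "__main__":
    for line in run_steps(ILAB_STEPS):
        print(line)
```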