IBM watsonx Agent Connect is a new technology to connect AI agents from any framework to IBM watsonx Orchestrate. This post explains how to build external agents and how to access them from watsonx Orchestrate.

Description of Agent Connect:

The IBM Agent Connect Framework is a foundational integration architecture designed to enable seamless connectivity between external agents and watsonx Orchestrate. Complementing this, IBM Agent Connect is a partner program that offers independent software vendors (ISVs) the opportunity to collaborate with IBM.

To allow multi-agent collaboration, agents communicate using a standardized chat completions API, which follows familiar patterns from OpenAI and other LLM providers. Agents can share tool calling details with other agents using Agent Connect events. Agents can stream their intermediate thinking steps and tool calls, providing visibility into their reasoning process and enabling more effective collaboration.

Within the Agent Connect framework, both tool calls and tool call responses can be shared among agents. This form of observability allows one agent to leverage the work performed by another agent. For instance, a CRM agent might retrieve account information and store details in a tool call response. Subsequently, an Email agent can utilize this information to find critical details, such as an email address for an account. By sharing tool calls and tool call responses, agents can collaborate on sub-tasks while sharing contextual information essential to the overall success of the main task.
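To make the CRM and Email hand-off more concrete, here is a minimal sketch of how a receiving agent might look up an earlier tool call response in the `messages` array it is handed. It assumes the shared context arrives as OpenAI-style messages with `role: "tool"`, `name`, and `content` fields, as in the log output shown later in this post. The helper name `findToolResponses`, the `get_account` tool, and the sample data are hypothetical.

```javascript
// Hypothetical helper: collect the parsed content of all tool responses
// produced by a given tool, in the order they appear in the conversation.
function findToolResponses(messages, toolName) {
  return messages
    .filter((m) => m.role === 'tool' && m.name === toolName)
    .map((m) => {
      try {
        return JSON.parse(m.content);
      } catch {
        return m.content; // keep non-JSON content as plain text
      }
    });
}

// Example context as an Email agent might receive it (illustrative data only).
const messages = [
  { role: 'user', content: 'Send a reminder to the Acme account.' },
  {
    role: 'assistant',
    content: '',
    tool_calls: [{
      id: 'tool_call-1',
      type: 'function',
      function: { name: 'get_account', arguments: '{"account":"Acme"}' }
    }]
  },
  {
    role: 'tool',
    tool_call_id: 'tool_call-1',
    name: 'get_account',
    content: '{"account":"Acme","email":"billing@acme.example"}'
  }
];

const [account] = findToolResponses(messages, 'get_account');
console.log(account.email);
```

The Email agent does not need to call the CRM tool again; it only reads what the CRM agent already shared.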

Example

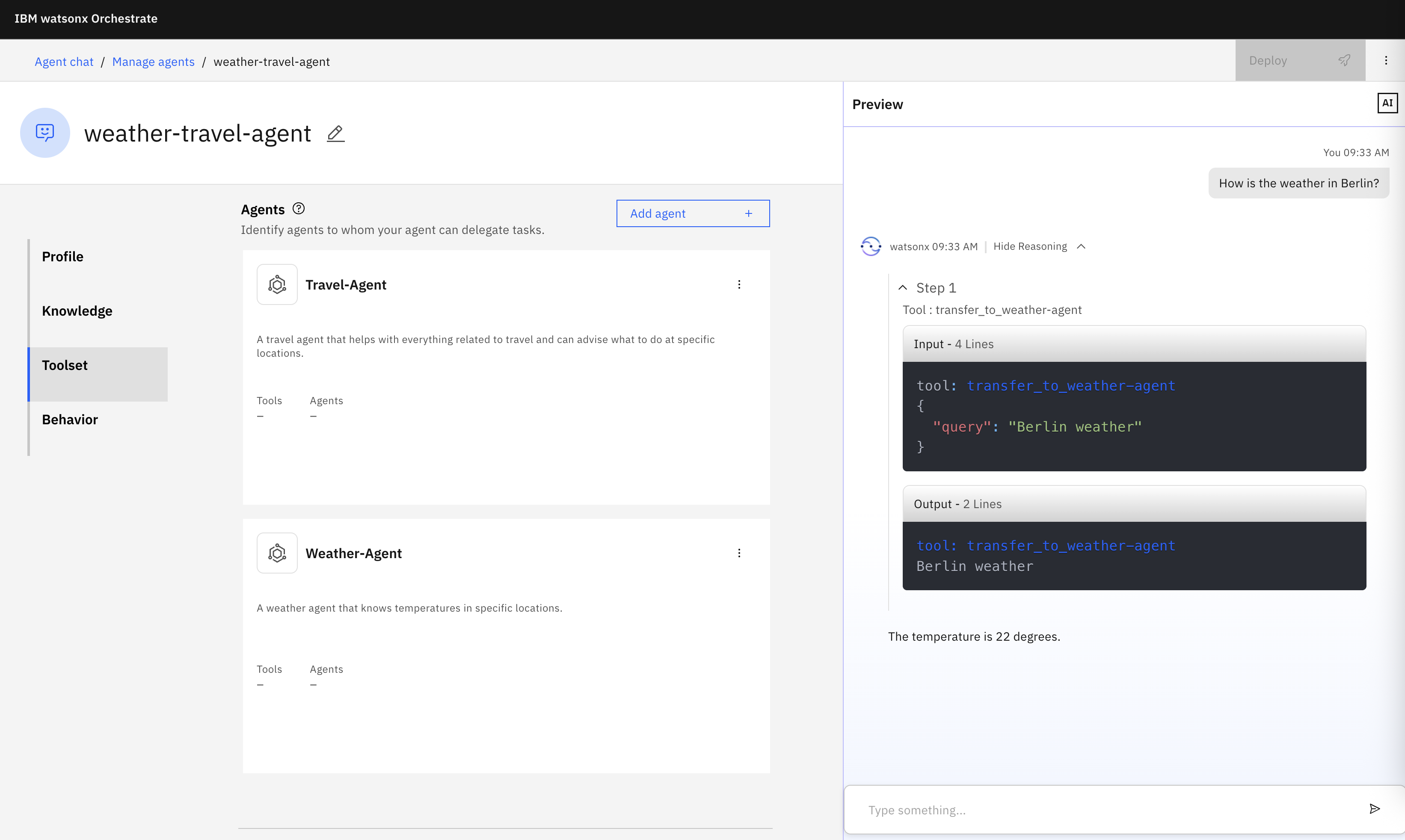

As an example, a ‘Weather and Travel’ agent invokes two external agents: a weather agent and a travel agent. The external agents are hosted on IBM Code Engine and implement the Agent Connect ‘/v1/chat’ endpoint.

Example conversations:

- User: How is the weather in Berlin?

- Agent: 22 degrees

- User: What can I do in Berlin?

- Agent: Check out Brandenburger Tor

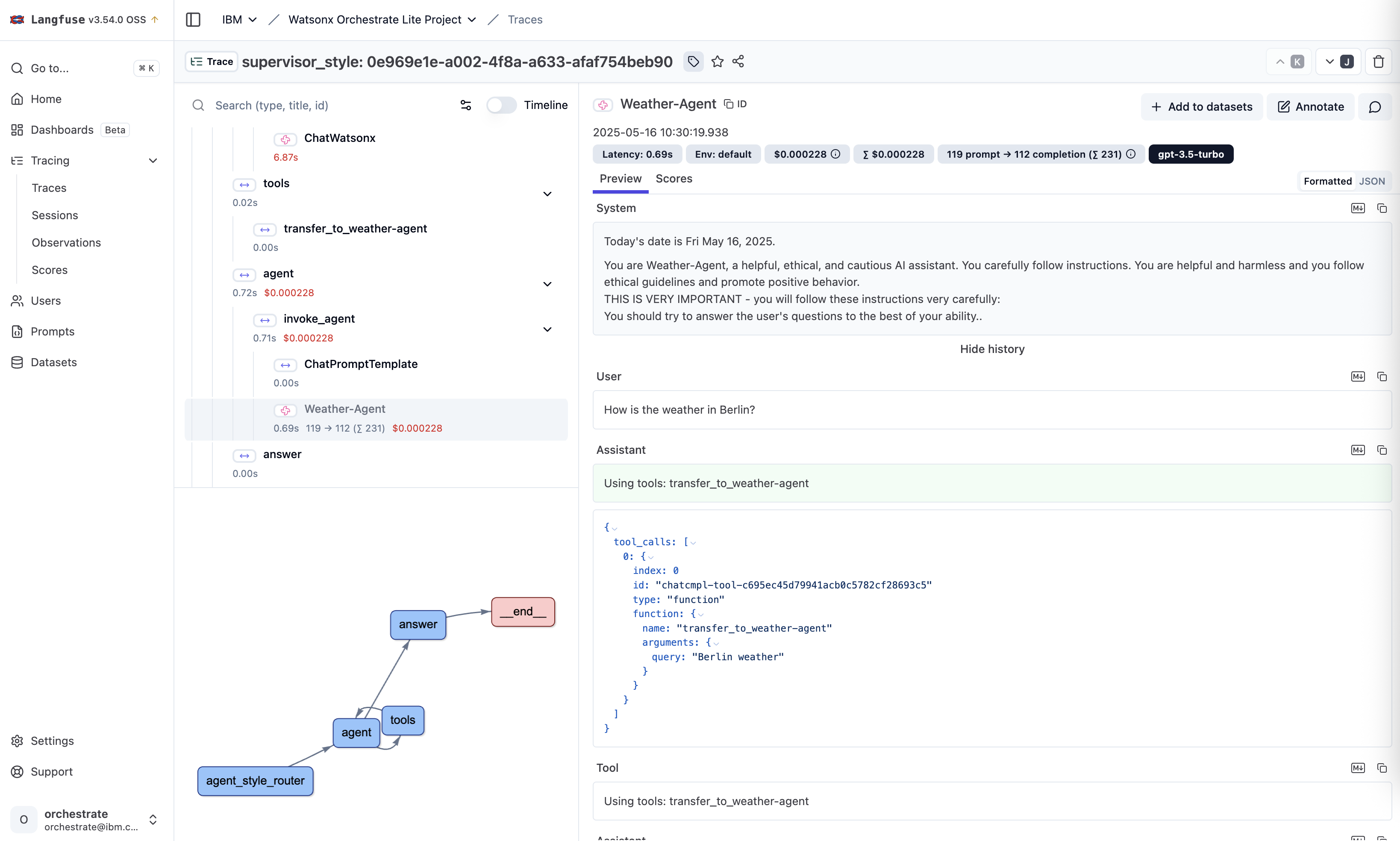

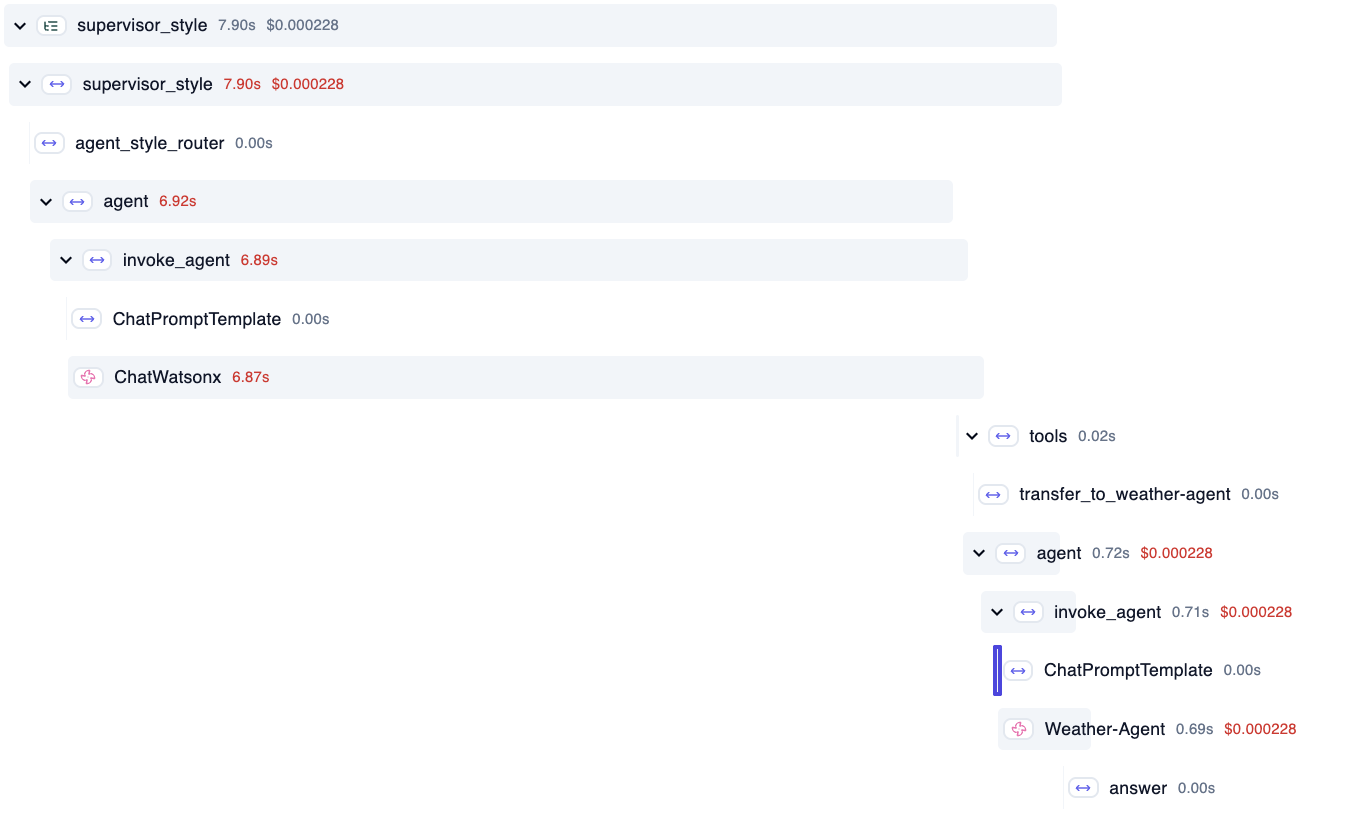

In Langfuse you can see the internal flow of steps.

How to read the traces:

- The first agent is the Orchestrate ‘main’/‘orchestrator’ agent

- The tool is the collaborator agent in Orchestrate

- The second agent is the external weather agent

Intermediate Steps

The OpenAI ‘v1/chat/completions’ API is currently the de facto standard for LLM invocations, and it is evolving to include agentic capabilities.

In addition to the agents' final answers, Agent Connect defines how to return intermediate steps so that tool calls and tool call responses can be shared. External agents receive this context in the messages passed to their ‘v1/chat’ endpoint.

The weather agent returns the following events:

    event: thread.run.step.delta
    data: {"id":"step-zmwubkwtgjt","object":"thread.run.step.delta","thread_id":"default","model":"agent-model","created":1747297317,"choices":[{"delta":{"role":"assistant",
    "step_details":{"type":"thinking",
    "content":"Analyzing the request and determining if tools are needed..."}}}]}

    event: thread.run.step.delta
    data: {"id":"step-nt8u1ryqdi","object":"thread.run.step.delta","thread_id":"default","model":"agent-model","created":1747297317,"choices":[{"delta":{"role":"assistant",
    "step_details":{"type":"tool_calls","tool_calls":[{"id":"tool_call-ndzmgyk0n9a",
    "name":"get_weather","args":{"location":"Berlin"}}]}}}]}

    event: thread.run.step.delta
    data: {"id":"step-21tvbj0jva6","object":"thread.run.step.delta","thread_id":"default","model":"agent-model","created":1747297317,"choices":[{"delta":{"role":"assistant",
    "step_details":{"type":"tool_response","content":
    "{\"location\":\"Berlin\",\"temperature\":22,\"condition\":\"sunny\",\"humidity\":45,\"unit\":\"celsius\"}","name":"get_weather","tool_call_id":"tool_call-ndzmgyk0n9a"}}}]}

    event: thread.message.delta
    data: {"id":"msg-r0jbgrc2ppo","object":"thread.message.delta","thread_id":"default","model":"granite","created":1747297317,
    "choices":[{"delta":{"role":"assistant","content":"The temperature is 22 degrees."}}]}

Weather Agent

The weather agent returns the final answer plus the tool call response.

    const express = require('express');
    const app = express();
    app.use(express.json());

    const PORT = process.env.PORT || 3005;
    app.listen(PORT, () => {
      console.log(`weather-agent.js server running on port ${PORT}`);
    });

    // Agent Connect chat endpoint: logs the incoming messages, then answers
    // either as an event stream or as a single JSON document.
    app.post('/v1/chat', async (req, res) => {
      try {
        const { messages, stream = false } = req.body;
        console.log("Niklas weather-agent.js v6 /v1/chat input: messages")
        for (const message of messages) {
          console.log(message)
          const toolCalls = JSON.stringify(message.tool_calls)
          if (toolCalls) console.log("Details tool_calls: " + toolCalls)
        }
        const threadId = req.headers['x-thread-id'] || 'default';
        if (stream) {
          res.setHeader('Content-Type', 'text/event-stream');
          res.setHeader('Cache-Control', 'no-cache');
          res.setHeader('Connection', 'keep-alive');
          await streamResponseWithTools(res, messages, threadId, tools, toolFunctions);
        } else {
          const response = await processMessagesWithTools(messages, threadId, tools, toolFunctions);
          res.json(response);
        }
      } catch (error) {
        console.error('Error processing chat request:', error);
        res.status(500).json({ error: 'Internal server error' });
      }
    });

    // Discovery endpoint that describes the agent.
    app.get('/v1/agents', (req, res) => {
      console.log("Niklas weather-agent.js /v1/agents invoked")
      res.json({
        agents: [
          {
            name: 'Weather Agent',
            description: 'A weather agent that knows temperatures in specific locations.',
            provider: {
              organization: 'Niklas Heidloff',
              url: 'https://heidloff.net'
            },
            version: '1.0.0',
            capabilities: {
              streaming: true
            }
          }
        ]
      });
    });

    function generateId() {
      return Math.random().toString(36).substring(2, 15);
    }

    // Tool definition in the OpenAI function calling format.
    const tools = [
      {
        type: "function",
        function: {
          name: "get_weather",
          description: "Get the current weather for a location",
          parameters: {
            type: "object",
            properties: {
              location: {
                type: "string",
                description: "The city and state or country (e.g., 'San Francisco, CA')"
              },
              unit: {
                type: "string",
                enum: ["celsius", "fahrenheit"],
                description: "The unit of temperature"
              }
            },
            required: ["location"]
          }
        }
      }
    ];

    // Tool implementation: returns fixed sample data as a JSON string.
    const toolFunctions = {
      get_weather: async (args) => {
        const { location, unit = "celsius" } = args;
        const weatherData = {
          location,
          temperature: unit === "celsius" ? 22 : 72,
          condition: "sunny",
          humidity: 45,
          unit
        };
        return JSON.stringify(weatherData);
      }
    };

    // Non-streaming path. The assistant response is hardcoded in this sample:
    // it always requests the get_weather tool for Berlin.
    async function processMessagesWithTools(messages, threadId, tools, toolFunctions) {
      try {
        const assistantResponse = {
          "role": "assistant",
          "content": null,
          "tool_calls": [{
            "id": `tool_call-${Math.random().toString(36).substring(2, 15)}`,
            "type": "function",
            "function": {
              "name": "get_weather",
              "arguments": "{\n\"location\": \"Berlin\"\n}"
            }
          }]
        }
        if (assistantResponse.tool_calls && assistantResponse.tool_calls.length > 0) {
          const updatedMessages = [...messages, assistantResponse];
          // Execute each requested tool and append its result as a 'tool' message.
          for (const toolCall of assistantResponse.tool_calls) {
            const { id, function: { name, arguments: argsString } } = toolCall;
            try {
              const args = JSON.parse(argsString);
              const result = await toolFunctions[name](args);
              updatedMessages.push({
                role: "tool",
                tool_call_id: id,
                name: name,
                content: result
              });
            } catch (error) {
              console.error(`Error executing tool ${name}:`, error);
              updatedMessages.push({
                role: "tool",
                tool_call_id: id,
                name: name,
                content: JSON.stringify({ error: error.message })
              });
            }
          }
          // Build the final answer from the last tool response.
          const content = updatedMessages[updatedMessages.length - 1].content
          const contentJSON = JSON.parse(content)
          const finalResponse = {
            "id": "xxxxxasdrfawe235235adf",
            "object": "chat.completion",
            "created": 1741569952,
            "model": "granite",
            "choices": [
              {
                "index": 0,
                "message": {
                  "role": "assistant",
                  "content": "The temperature is " + contentJSON.temperature + " degrees.",
                  "refusal": null,
                  "annotations": []
                },
                "logprobs": null,
                "finish_reason": "stop"
              }
            ],
            "usage": {
              "prompt_tokens": 19,
              "completion_tokens": 10,
              "total_tokens": 29,
              "prompt_tokens_details": {
                "cached_tokens": 0,
                "audio_tokens": 0
              },
              "completion_tokens_details": {
                "reasoning_tokens": 0,
                "audio_tokens": 0,
                "accepted_prediction_tokens": 0,
                "rejected_prediction_tokens": 0
              }
            },
            messages: updatedMessages
          }
          console.log("Niklas weather-agent.js v6 /v1/chat before ouput: messages")
          for (const message of updatedMessages) {
            console.log(message)
            const toolCalls = JSON.stringify(message.tool_calls)
            if (toolCalls) console.log("Details tool_calls: " + toolCalls)
          }
          return {
            id: finalResponse.id,
            object: 'chat.completion',
            created: finalResponse.created,
            model: finalResponse.model,
            choices: finalResponse.choices,
            usage: finalResponse.usage
          };
        } else {
          // Not reached in this sample because the tool call above is hardcoded.
          // The original returned an undefined variable (llmResponse) here.
          throw new Error('Expected the assistant response to contain a tool call');
        }
      } catch (error) {
        console.error("Error in processMessagesWithTools:", error);
        throw error;
      }
    }

    // Streaming path: emits a thinking step, the tool call, the tool response,
    // and finally the answer as server-sent events.
    async function streamResponseWithTools(res, messages, threadId, tools, toolFunctions) {
      try {
        const thinkingStep = {
          id: `step-${Math.random().toString(36).substring(2, 15)}`,
          object: 'thread.run.step.delta',
          thread_id: threadId,
          model: 'agent-model',
          created: Math.floor(Date.now() / 1000),
          choices: [
            {
              delta: {
                role: 'assistant',
                step_details: {
                  type: 'thinking',
                  content: 'Analyzing the request and determining if tools are needed...'
                }
              }
            }
          ]
        };
        res.write(`event: thread.run.step.delta\n`);
        res.write(`data: ${JSON.stringify(thinkingStep)}\n\n`);
        let assistantMessage = { role: "assistant", content: "", tool_calls: [] };
        let currentToolCall = null;
        // Hardcoded stand-in for a streamed LLM chunk that requests the tool.
        const chunk = {
          "id": "chatcmpl-123",
          "object": "chat.completion.chunk",
          "created": 1694268190,
          "model": "agent-model",
          "choices": [{
            "index": 0,
            "delta": {
              "role": "assistant",
              "content": null,
              "tool_calls": [{
                "id": `tool_call-${Math.random().toString(36).substring(2, 15)}`,
                "index": 0,
                "type": "function",
                "function": {
                  "name": "get_weather",
                  "arguments": "{\n\"location\": \"Berlin\"\n}"
                }
              }]
            },
            "logprobs": null,
            "finish_reason": "tool_calls"
          }
          ]
        }
        const delta = chunk.choices[0]?.delta;
        if (delta.content) {
          assistantMessage.content += delta.content;
          const messageDelta = {
            id: `msg-${Math.random().toString(36).substring(2, 15)}`,
            object: 'thread.message.delta',
            thread_id: threadId,
            model: chunk.model,
            created: Math.floor(Date.now() / 1000),
            choices: [{
              delta: {
                role: 'assistant',
                content: delta.content
              }
            }]
          };
          res.write(`event: thread.message.delta\n`);
          res.write(`data: ${JSON.stringify(messageDelta)}\n\n`);
        }
        // Accumulate the tool call from the chunk.
        if (delta.tool_calls && delta.tool_calls.length > 0) {
          const toolCallDelta = delta.tool_calls[0];
          if (toolCallDelta.index === 0 && toolCallDelta.id) {
            currentToolCall = {
              id: toolCallDelta.id,
              type: "function",
              function: {
                name: "",
                arguments: ""
              }
            };
            assistantMessage.tool_calls.push(currentToolCall);
          }
          if (currentToolCall) {
            if (toolCallDelta.function?.name) {
              currentToolCall.function.name = toolCallDelta.function.name;
            }
            if (toolCallDelta.function?.arguments) {
              currentToolCall.function.arguments += toolCallDelta.function.arguments;
            }
          }
        }
        if (chunk.choices[0]?.finish_reason === "tool_calls") {
          // Share each tool call as an intermediate step.
          for (const toolCall of assistantMessage.tool_calls) {
            const toolCallStep = {
              id: `step-${Math.random().toString(36).substring(2, 15)}`,
              object: 'thread.run.step.delta',
              thread_id: threadId,
              model: chunk.model,
              created: Math.floor(Date.now() / 1000),
              choices: [
                {
                  delta: {
                    role: 'assistant',
                    step_details: {
                      type: 'tool_calls',
                      tool_calls: [
                        {
                          id: toolCall.id,
                          name: toolCall.function.name,
                          args: JSON.parse(toolCall.function.arguments)
                        }
                      ]
                    }
                  }
                }
              ]
            };
            res.write(`event: thread.run.step.delta\n`);
            //res.write(`event: tool_call\n`);
            res.write(`data: ${JSON.stringify(toolCallStep)}\n\n`);
          }
          const updatedMessages = [...messages, assistantMessage];
          // Execute each tool and share its response as an intermediate step.
          for (const toolCall of assistantMessage.tool_calls) {
            try {
              const { id, function: { name, arguments: argsString } } = toolCall;
              const args = JSON.parse(argsString);
              const result = await toolFunctions[name](args);
              const toolResponseStep = {
                id: `step-${Math.random().toString(36).substring(2, 15)}`,
                object: 'thread.run.step.delta',
                thread_id: threadId,
                model: chunk.model,
                created: Math.floor(Date.now() / 1000),
                choices: [
                  {
                    delta: {
                      role: 'assistant',
                      step_details: {
                        type: 'tool_response',
                        content: result,
                        name: name,
                        tool_call_id: id
                      }
                    }
                  }
                ]
              };
              res.write(`event: thread.run.step.delta\n`);
              res.write(`data: ${JSON.stringify(toolResponseStep)}\n\n`);
              updatedMessages.push({
                role: "tool",
                tool_call_id: id,
                name: name,
                content: result
              });
            } catch (error) {
              console.error(`Error executing tool ${toolCall.function.name}:`, error);
              const errorResponseStep = {
                id: `step-${Math.random().toString(36).substring(2, 15)}`,
                object: 'thread.run.step.delta',
                thread_id: threadId,
                model: chunk.model,
                created: Math.floor(Date.now() / 1000),
                choices: [
                  {
                    delta: {
                      role: 'assistant',
                      step_details: {
                        type: 'tool_response',
                        content: JSON.stringify({ error: error.message }),
                        name: toolCall.function.name,
                        tool_call_id: toolCall.id
                      }
                    }
                  }
                ]
              };
              res.write(`event: thread.run.step.delta\n`);
              res.write(`data: ${JSON.stringify(errorResponseStep)}\n\n`);
              updatedMessages.push({
                role: "tool",
                tool_call_id: toolCall.id,
                name: toolCall.function.name,
                content: JSON.stringify({ error: error.message })
              });
            }
          }
          // Build the final answer from the last tool response.
          const content = updatedMessages[updatedMessages.length - 1].content
          const contentJSON = JSON.parse(content)
          const finalChunk = {
            "id": "xxxxxasdrfawe235235adf",
            "object": "chat.completion",
            "created": 1741569952,
            "model": "granite",
            "choices": [
              {
                "index": 0,
                "delta": {
                  "role": "assistant",
                  "content": "The temperature is " + contentJSON.temperature + " degrees.",
                  "refusal": null,
                  "annotations": []
                },
                "logprobs": null,
                "finish_reason": "stop"
              }
            ],
            "usage": {
              "prompt_tokens": 19,
              "completion_tokens": 10,
              "total_tokens": 29,
              "prompt_tokens_details": {
                "cached_tokens": 0,
                "audio_tokens": 0
              },
              "completion_tokens_details": {
                "reasoning_tokens": 0,
                "audio_tokens": 0,
                "accepted_prediction_tokens": 0,
                "rejected_prediction_tokens": 0
              }
            },
            messages: updatedMessages
          }
          console.log("Niklas weather-agent.js v6 /v1/chat before ouput: messages")
          for (const message of updatedMessages) {
            console.log(message)
            const toolCalls = JSON.stringify(message.tool_calls)
            if (toolCalls) console.log("Details tool_calls: " + toolCalls)
          }
          const messageDelta = {
            id: `msg-${Math.random().toString(36).substring(2, 15)}`,
            object: 'thread.message.delta',
            thread_id: threadId,
            model: finalChunk.model,
            created: Math.floor(Date.now() / 1000),
            choices: [
              {
                delta: {
                  role: 'assistant',
                  content: finalChunk.choices[0].delta.content
                }
              }
            ]
          };
          res.write(`event: thread.message.delta\n`);
          res.write(`data: ${JSON.stringify(messageDelta)}\n\n`);
        }
        res.end();
      } catch (error) {
        console.error("Error in streamResponseWithTools:", error);
        // Report the failure to the caller as a regular message event.
        const errorMessage = {
          id: `error-${Math.random().toString(36).substring(2, 15)}`,
          object: 'thread.message.delta',
          thread_id: threadId,
          model: 'agent-model',
          created: Math.floor(Date.now() / 1000),
          choices: [
            {
              delta: {
                role: 'assistant',
                content: `An error occurred: ${error.message}`
              }
            }
          ]
        };
        res.write(`event: thread.message.delta\n`);
        res.write(`data: ${JSON.stringify(errorMessage)}\n\n`);
        res.end();
      }
    }
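The streaming function above builds the same event envelope several times. As one possible refactoring (not part of the original sample), the envelope could come from a single helper. The names `buildStepDelta` and `formatEvent` are hypothetical; the field layout simply mirrors the objects in the listing above.

```javascript
function generateId() {
  return Math.random().toString(36).substring(2, 15);
}

// Build a 'thread.run.step.delta' envelope around the given step details.
function buildStepDelta(threadId, model, stepDetails) {
  return {
    id: `step-${generateId()}`,
    object: 'thread.run.step.delta',
    thread_id: threadId,
    model,
    created: Math.floor(Date.now() / 1000),
    choices: [{ delta: { role: 'assistant', step_details: stepDetails } }]
  };
}

// Serialize a payload in the "event: ...\ndata: ...\n\n" form that the
// sample writes with res.write; the event name is taken from payload.object.
function formatEvent(payload) {
  return `event: ${payload.object}\ndata: ${JSON.stringify(payload)}\n\n`;
}

const step = buildStepDelta('default', 'agent-model', {
  type: 'tool_calls',
  tool_calls: [{ id: 'tool_call-1', name: 'get_weather', args: { location: 'Berlin' } }]
});
console.log(formatEvent(step));
```

With such helpers, each pair of `res.write` calls in `streamResponseWithTools` could shrink to a single `res.write(formatEvent(step))`.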

Travel Agent

The travel agent has no tool and returns only the final answer.

    const express = require('express');
    const app = express();
    app.use(express.json());

    const PORT = process.env.PORT || 3005;
    app.listen(PORT, () => {
      console.log(`travel-agent.js server running on port ${PORT}`);
    });

    // Agent Connect chat endpoint: logs the incoming messages, then answers
    // either as an event stream or as a single JSON document.
    app.post('/v1/chat', async (req, res) => {
      try {
        const { messages, stream = false } = req.body;
        console.log("Niklas travel-agent.js v6 /v1/chat input: messages")
        for (const message of messages) {
          console.log(message)
          const toolCalls = JSON.stringify(message.tool_calls)
          if (toolCalls) console.log("Details tool_calls: " + toolCalls)
        }
        const threadId = req.headers['x-thread-id'] || 'default';
        if (stream) {
          res.setHeader('Content-Type', 'text/event-stream');
          res.setHeader('Cache-Control', 'no-cache');
          res.setHeader('Connection', 'keep-alive');
          await streamResponse(res, messages, threadId);
        } else {
          const response = await processMessages(messages, threadId);
          res.json(response);
        }
      } catch (error) {
        console.error('Error processing chat request:', error);
        res.status(500).json({ error: 'Internal server error' });
      }
    });

    // Discovery endpoint that describes the agent.
    app.get('/v1/agents', (req, res) => {
      console.log("Niklas travel-agent.js /v1/agents invoked")
      res.json({
        agents: [
          {
            name: 'Travel Agent',
            description: 'A travel agent that helps with everything related to travel and can advise what to do at specific locations.',
            provider: {
              organization: 'Niklas Heidloff',
              url: 'https://heidloff.net'
            },
            version: '1.0.0',
            capabilities: {
              streaming: true
            }
          }
        ]
      });
    });

    // Non-streaming path: returns a fixed answer as a chat completion.
    async function processMessages(messages, threadId) {
      const response = {
        id: generateId(),
        object: 'chat.completion',
        created: Math.floor(Date.now() / 1000),
        model: 'agent-model',
        choices: [
          {
            index: 0,
            message: {
              role: 'assistant',
              content: 'You should check out the Brandenburger Tor.'
            },
            finish_reason: 'stop'
          }
        ],
        usage: {
          prompt_tokens: 0,
          completion_tokens: 0,
          total_tokens: 0
        }
      };
      return response;
    }

    // Streaming path: emits a thinking step, waits a second, then emits the answer.
    async function streamResponse(res, messages, threadId) {
      const thinkingStep = {
        id: `step-${generateId()}`,
        object: 'thread.run.step.delta',
        thread_id: threadId,
        model: 'agent-model',
        created: Math.floor(Date.now() / 1000),
        choices: [
          {
            delta: {
              role: 'assistant',
              step_details: {
                type: 'thinking',
                content: 'Analyzing the user request and determining the best approach...'
              }
            }
          }
        ]
      };
      res.write(`event: thread.run.step.delta\n`);
      res.write(`data: ${JSON.stringify(thinkingStep)}\n\n`);
      await new Promise(resolve => setTimeout(resolve, 1000));
      const messageChunk = {
        id: `msg-${generateId()}`,
        object: 'thread.message.delta',
        thread_id: threadId,
        model: 'agent-model',
        created: Math.floor(Date.now() / 1000),
        choices: [
          {
            delta: {
              role: 'assistant',
              content: 'You should check out the Brandenburger Tor.'
            }
          }
        ]
      };
      console.log("Niklas travel-agent.js v6 /v1/chat before ouput: messages")
      for (const message of messages) {
        console.log(message)
        const toolCalls = JSON.stringify(message.tool_calls)
        if (toolCalls) console.log("Details tool_calls: " + toolCalls)
      }
      res.write(`event: thread.message.delta\n`);
      res.write(`data: ${JSON.stringify(messageChunk)}\n\n`);
      res.end();
    }

    function generateId() {
      return Math.random().toString(36).substring(2, 15);
    }
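Both sample agents accept the same request shape on ‘/v1/chat’, so a small client can exercise either of them. The following is a minimal sketch, assuming Node 18 or later (where `fetch` is available globally) and an agent reachable at a base URL such as `http://localhost:3005`. The names `buildChatRequest` and `askAgent` are hypothetical, and the bearer token is only a placeholder. The sketch uses the non-streaming path (`stream: false`), so the agent answers with a single JSON document.

```javascript
// Build the fetch options for a chat request to an external agent.
function buildChatRequest(question, { stream = false, apiKey = 'xxx' } = {}) {
  return {
    method: 'POST',
    headers: {
      'Authorization': `Bearer ${apiKey}`,
      'Content-Type': 'application/json'
    },
    body: JSON.stringify({
      model: 'granite',
      stream,
      messages: [{ role: 'user', content: question }]
    })
  };
}

// Send the question and return the text of the first choice.
async function askAgent(baseUrl, question) {
  const response = await fetch(`${baseUrl}/v1/chat`, buildChatRequest(question));
  if (!response.ok) throw new Error(`Agent returned HTTP ${response.status}`);
  const completion = await response.json();
  return completion.choices[0].message.content;
}

// Usage (requires a running agent):
// askAgent('http://localhost:3005', 'What can I do in Berlin?').then(console.log);
```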

External Agents

The two example agents can be developed and tested locally via node:

package.json:

    {
      "dependencies": {
        "express": "5.1.0"
      }
    }

Dockerfile:

    # The listing shows no FROM line; this Node.js base image is an assumption.
    FROM node:22
    WORKDIR /app
    COPY package.json /app
    RUN npm install
    COPY . /app
    CMD ["node","weather-agent.js"]

Commands to run the agent locally:

    podman build -t weather-agent-mac:6 .
    podman ps -a | grep 3005
    podman rm -f ...
    podman run -it -p 3005:3005 weather-agent-mac:6
    curl -XPOST -H 'Authorization: Bearer xxx' -H "Content-type: application/json" -d '{
      "model": "granite",
      "stream": true,
      "messages": [
        {
          "role": "user",
          "content": "How is the weather in Berlin?"
        }
      ]
    }' 'http://localhost:3005/v1/chat'

While handling this request, the agent writes the following output to its console:

    weather-agent.js server running on port 3005
    Niklas weather-agent.js v6 /v1/chat input: messages
    { role: 'user', content: 'How is the weather in Berlin?' }
    Niklas weather-agent.js v6 /v1/chat before ouput: messages
    { role: 'user', content: 'How is the weather in Berlin?' }
    {
      role: 'assistant',
      content: '',
      tool_calls: [
        {
          id: 'tool_call-2gjz8ws28sw',
          type: 'function',
          function: [Object]
        }
      ]
    }
    Details tool_calls: [{"id":"tool_call-2gjz8ws28sw","type":"function","function":{"name":"get_weather","arguments":"{\n\"location\": \"Berlin\"\n}"}}]
    {
      role: 'tool',
      tool_call_id: 'tool_call-2gjz8ws28sw',
      name: 'get_weather',
      content: '{"location":"Berlin","temperature":22,"condition":"sunny","humidity":45,"unit":"celsius"}'
    }
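Because the curl call sets `"stream": true`, the client receives the event stream described under Intermediate Steps rather than a single JSON document, and it has to split that stream into events before it can act on them. The following is a minimal sketch of such a parser. It assumes each event consists of one `event:` line and one `data:` line with JSON on a single line, terminated by a blank line, which is how the sample agents write them. The function name `parseAgentEvents` is hypothetical, and this is not a complete Server-Sent Events implementation.

```javascript
// Split an event stream into { type, data } objects.
function parseAgentEvents(streamText) {
  const events = [];
  for (const block of streamText.split('\n\n')) {
    let type = null;
    let data = null;
    for (const line of block.split('\n')) {
      if (line.startsWith('event: ')) {
        type = line.slice('event: '.length);
      } else if (line.startsWith('data: ')) {
        data = JSON.parse(line.slice('data: '.length));
      }
    }
    if (type && data) events.push({ type, data });
  }
  return events;
}

// Shortened sample stream in the shape the weather agent emits.
const sample =
  'event: thread.run.step.delta\n' +
  'data: {"choices":[{"delta":{"role":"assistant","step_details":{"type":"tool_response","name":"get_weather","content":"{\\"temperature\\":22}"}}}]}\n\n' +
  'event: thread.message.delta\n' +
  'data: {"choices":[{"delta":{"role":"assistant","content":"The temperature is 22 degrees."}}]}\n\n';

for (const { type, data } of parseAgentEvents(sample)) {
  const delta = data.choices[0].delta;
  console.log(type, delta.step_details ? delta.step_details.type : delta.content);
}
```

A real client would read the response body incrementally and buffer partial events; the sketch works on a complete string to keep the parsing logic visible.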

Setup

To deploy the example scenario, execute the following steps and replace my configuration values with your own.

Deploy external agents on Code Engine

    brew install ibmcloud
    ibmcloud plugin install container-registry
    ibmcloud login --sso
    ibmcloud cr region-set global
    ibmcloud target -g niklas
    ibmcloud cr namespace-add niklas
    ibmcloud target -r us-east
    ibmcloud cr login
    podman build --platform linux/amd64 -t weather-agent:6 .
    podman tag weather-agent:6 icr.io/niklas/weather-agent:6
    podman push icr.io/niklas/weather-agent:6

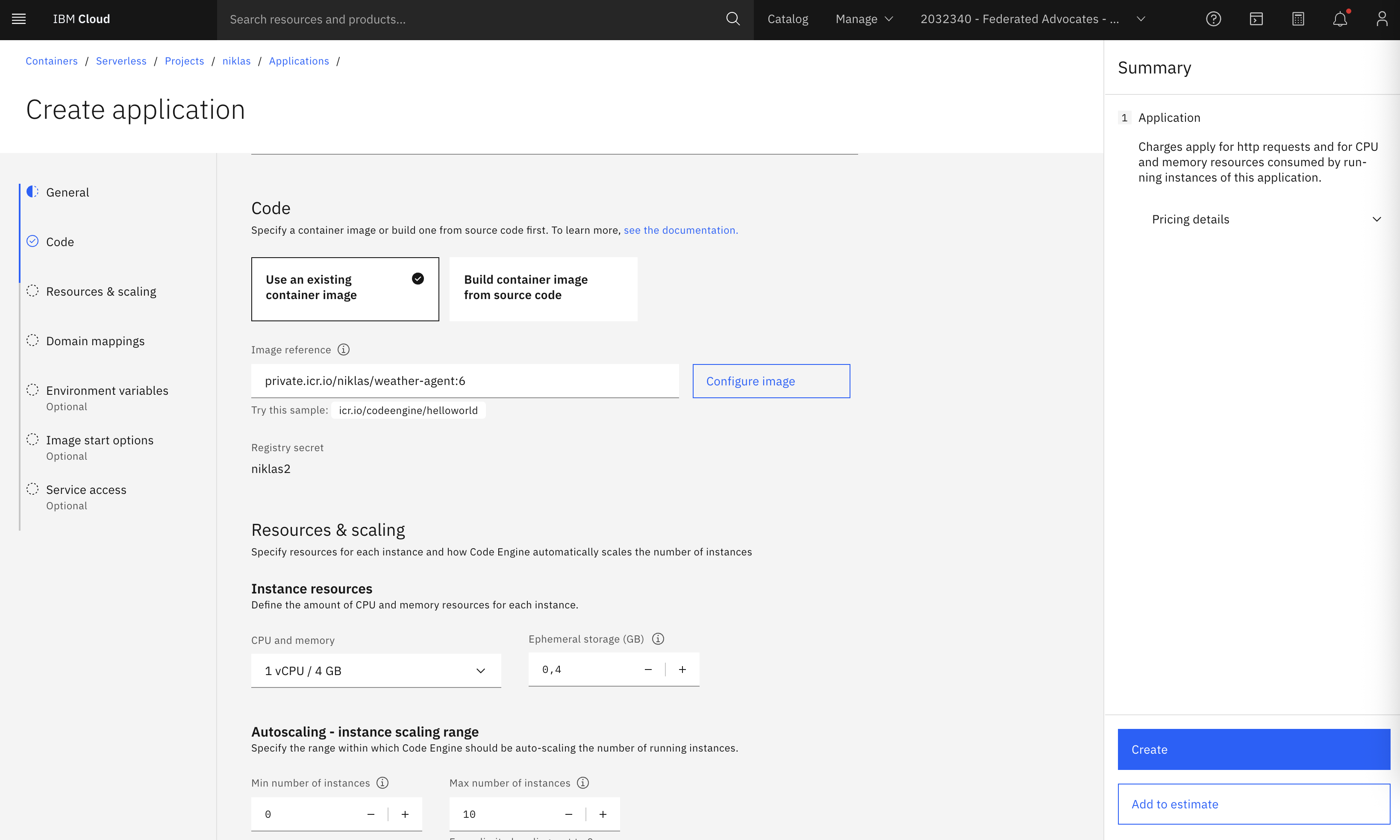

Create a serverless Code Engine application ‘weather-agent’:

- Image: private.icr.io/niklas/weather-agent:6

- Min instances: 1

- Max instances: 1

- Listening port: 3005

Test the endpoint:

    curl https://weather-agent.xxx.us-east.codeengine.appdomain.cloud/v1/agents
    {"agents":[{"name":"Weather Agent","description":"A weather agent that knows about temperatures in specific locations.","provider":{"organization":"Niklas Heidloff","url":"https://heidloff.net"},"version":"1.0.0","capabilities":{"streaming":true}}]}
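Before importing an agent, it can be useful to check that the discovery response has the expected shape. The sketch below is one way to do that. Which fields Orchestrate actually requires is not stated in this post, so the checked fields are an assumption based on what the sample agents return, and the function name `checkAgentsResponse` is hypothetical.

```javascript
// Return a list of problems found in a /v1/agents response body.
// An empty list means the body matches the shape the sample agents return.
function checkAgentsResponse(body) {
  if (!body || !Array.isArray(body.agents) || body.agents.length === 0) {
    return ['response has no "agents" array'];
  }
  const problems = [];
  body.agents.forEach((agent, i) => {
    for (const field of ['name', 'description', 'version']) {
      if (typeof agent[field] !== 'string' || agent[field] === '') {
        problems.push(`agents[${i}].${field} is missing`);
      }
    }
    if (typeof agent.capabilities?.streaming !== 'boolean') {
      problems.push(`agents[${i}].capabilities.streaming is missing`);
    }
  });
  return problems;
}

// The response returned by the weather agent in this post.
const body = JSON.parse(
  '{"agents":[{"name":"Weather Agent","description":"A weather agent that knows about temperatures in specific locations.",' +
  '"provider":{"organization":"Niklas Heidloff","url":"https://heidloff.net"},"version":"1.0.0","capabilities":{"streaming":true}}]}'
);
console.log(checkAgentsResponse(body));
```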

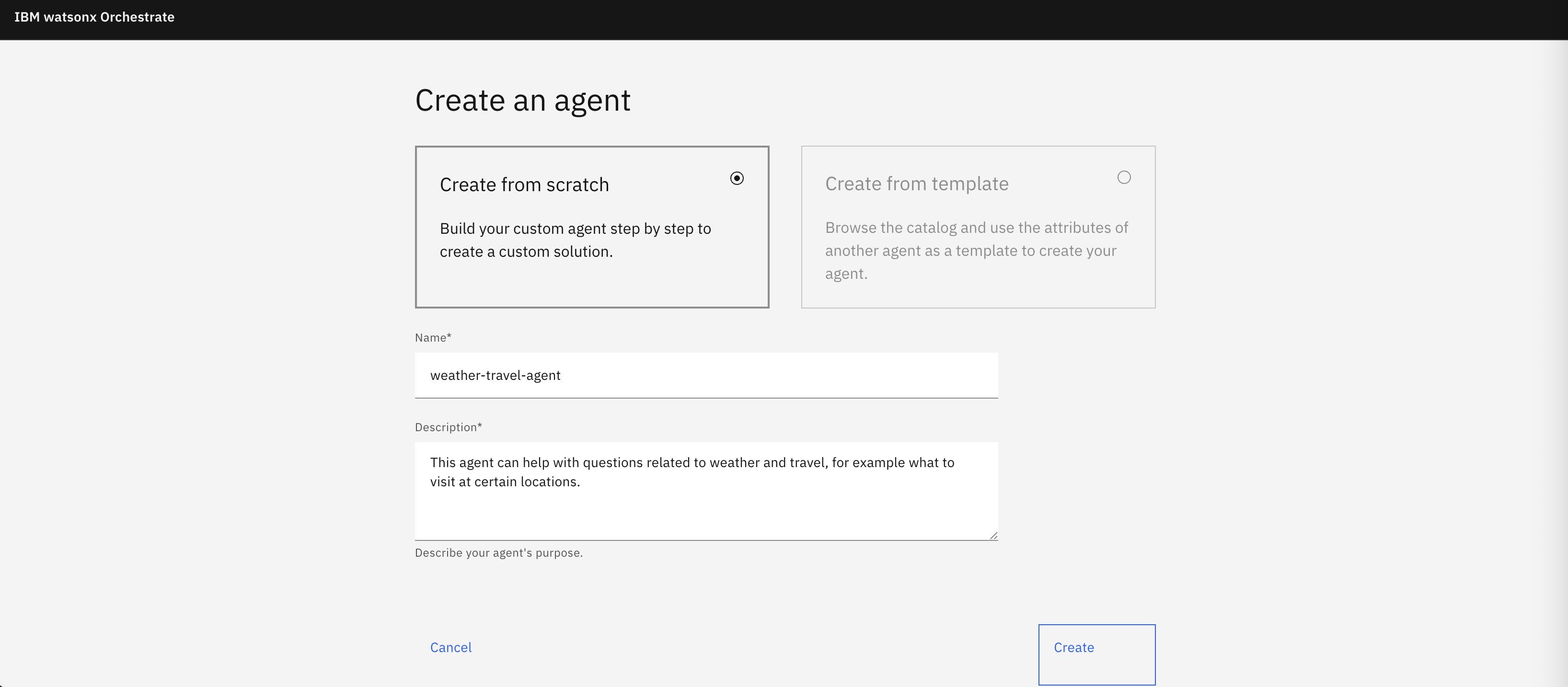

Create an agent and import the two external agents in Orchestrate

Create new agent:

Name: weather-travel-agent

Description: This agent can help with questions related to weather and travel, for example what to visit at certain locations.

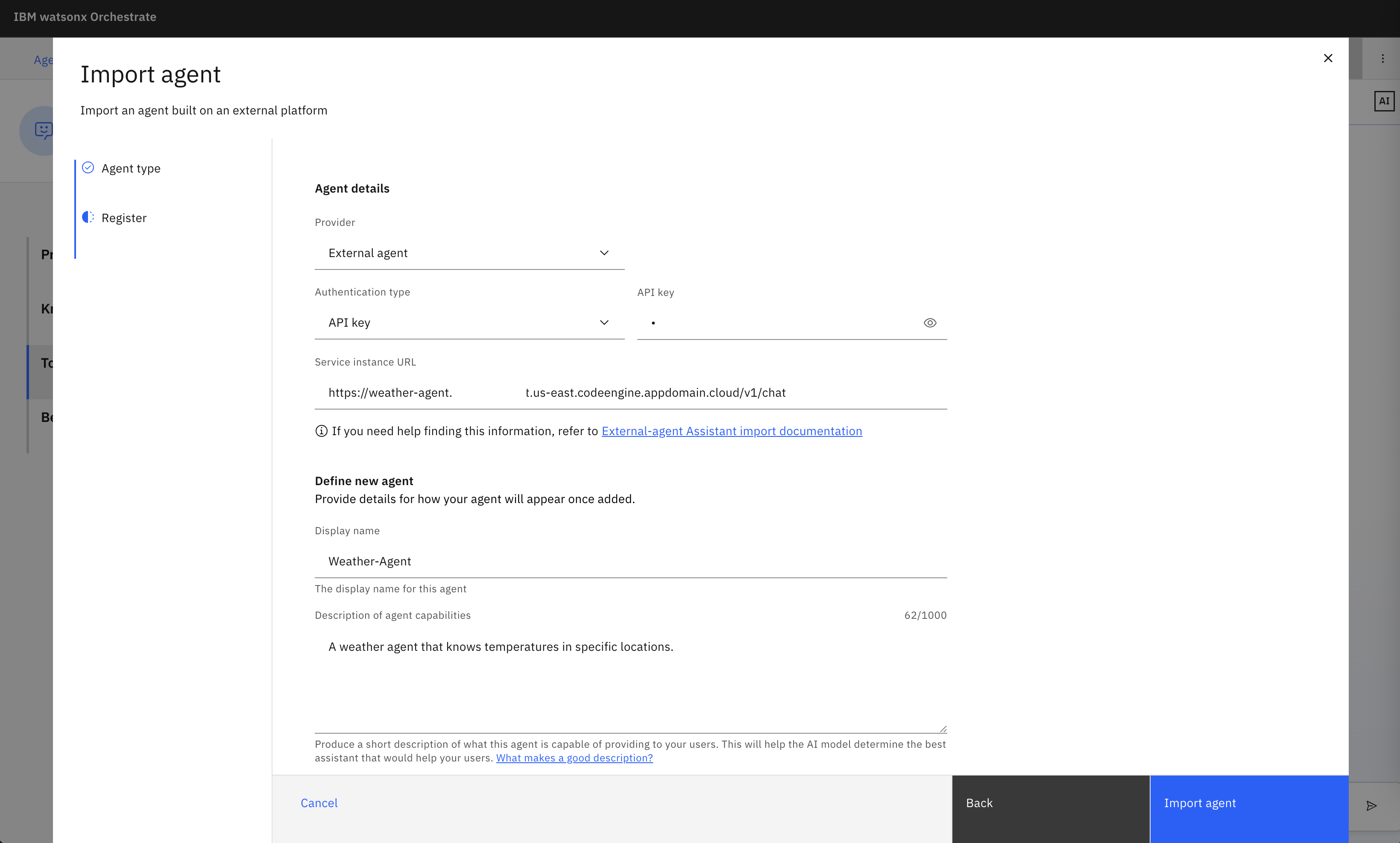

Import external agents:

API key: x

URL: https://weather-agent.1vdiu694k1et.us-east.codeengine.appdomain.cloud/v1/chat

Name: Weather-Agent

Description: A weather agent that knows temperatures in specific locations.

Next Steps

To learn more, check out the watsonx Orchestrate documentation and the watsonx Orchestrate Agent Development Kit landing page.