IBM Watson provides services that enable cognitive functionality in your own applications. In this article I describe at a high level how to ‘mix’ some of these services to build question and answer systems.

To understand a sample scenario of a cognitive question and answer system, watch this two-minute video “Pepper, the robot”.

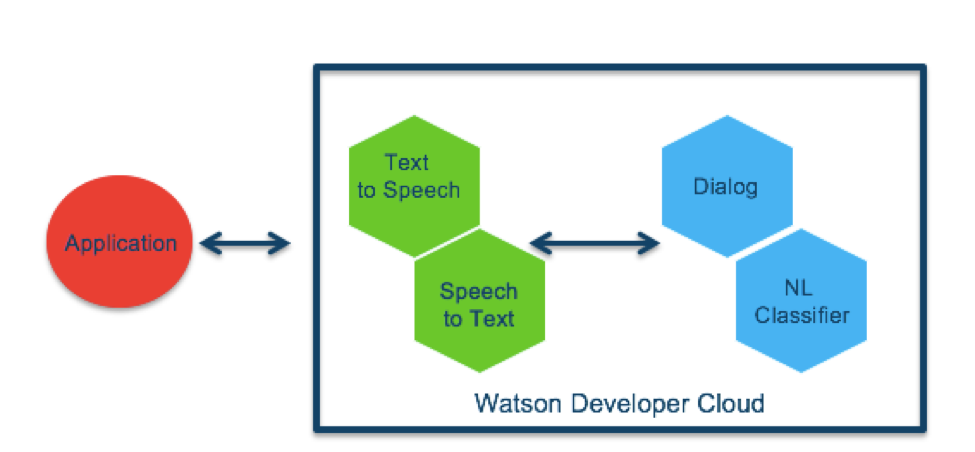

To build question and answer systems, you can use Watson services as described by Rahul A. Garg. For speech input and output, Watson and IBM Bluemix provide the Speech to Text and Text to Speech services. For the conversation itself, the Dialog and Natural Language Classifier services can be used.

The dialog and classifier services can be used separately, but I think combining them is very powerful. With the dialog service (simple sample) you define the flow of the conversation; with the classifier service (simple sample) the application can also understand user input that is not explicitly defined in the dialog definition. To demonstrate this, I use the What’s In Theaters application, which comes in two versions: the first version uses only the dialog service, the second version additionally uses the classifier service.

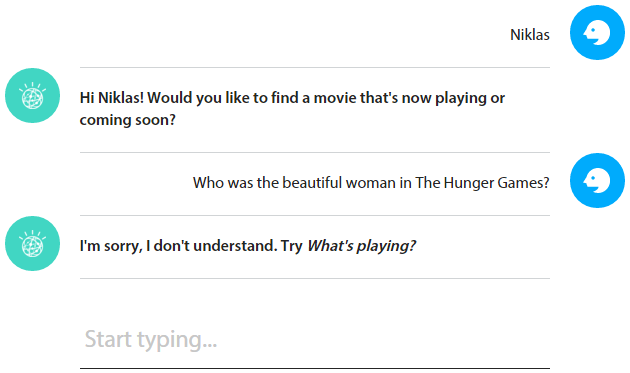

Version 1 – only dialog service

This screenshot shows what the dialog service returns when no classifier is used. Since the user’s question is not defined in the dialog definition, the application responds that it cannot understand the question.

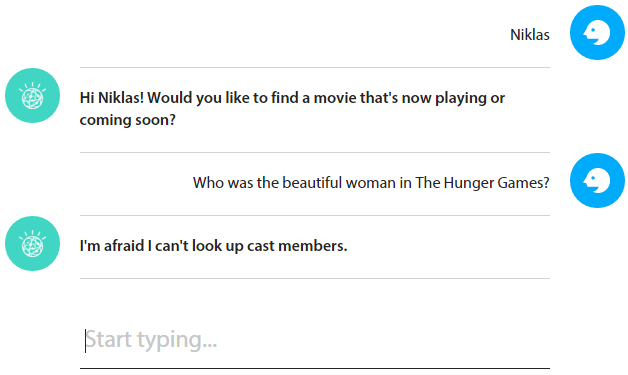

Version 2 – dialog and classifier services

The next screenshot shows the second version of the application, which additionally uses the classifier. Now the application understands that the question is about actors, because the class LookupActors was defined in the classifier’s training data.

Without going into too much detail, here is a high-level description of how this can be developed. For a short introduction to the dialog service check out this sample, and for an introduction to the classifier service check out this sample.

The Java application invokes the classifier service every time it receives input from users and stores the two most likely classes and their confidence levels as profile variables of the dialog.

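```java
// Classify the user's input with the Natural Language Classifier
Classification classification = nlcService.classify(classifier_id, input);
classInfo = classification.getClasses(); // classInfo is not declared in this excerpt

// Store the two most likely classes and their confidence levels
// (this assumes the classifier returned at least two classes)
List<NameValue> nameValues = new ArrayList<NameValue>();
nameValues.add(new NameValue("Class1", classInfo.get(0).getName()));
nameValues.add(new NameValue("Class1_Confidence", Double.toString(classInfo.get(0).getConfidence())));
nameValues.add(new NameValue("Class2", classInfo.get(1).getName()));
nameValues.add(new NameValue("Class2_Confidence", Double.toString(classInfo.get(1).getConfidence())));

// Write the values into the dialog profile of the current client
dialogService.updateProfile(dialog_id, Integer.parseInt(clientId), nameValues);
```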
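To experiment with this step without a Bluemix account, here is a minimal, self-contained sketch of the same idea. It does not call the Watson SDK: `ClassScore` and `NameValue` are hypothetical stand-in records introduced only for this example, and the sort step is there because I don't want to rely on the order in which classes are returned. Unlike the snippet above, it also copes with a result that contains fewer than two classes.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

public class TopClasses {

    // Hypothetical stand-in for one classifier result (class name + confidence).
    record ClassScore(String name, double confidence) {}

    // Hypothetical stand-in for a dialog profile name/value pair.
    record NameValue(String name, String value) {}

    // Builds Class1, Class1_Confidence, Class2, Class2_Confidence from the
    // classifier output, skipping the second class if only one was returned.
    static List<NameValue> toProfileVariables(List<ClassScore> classes) {
        // Sort by descending confidence so index 0 is the most likely class.
        List<ClassScore> sorted = new ArrayList<>(classes);
        sorted.sort(Comparator.comparingDouble(ClassScore::confidence).reversed());

        List<NameValue> nameValues = new ArrayList<>();
        int count = Math.min(2, sorted.size());
        for (int i = 0; i < count; i++) {
            ClassScore c = sorted.get(i);
            String prefix = "Class" + (i + 1);
            nameValues.add(new NameValue(prefix, c.name()));
            nameValues.add(new NameValue(prefix + "_Confidence", Double.toString(c.confidence())));
        }
        return nameValues;
    }

    public static void main(String[] args) {
        // Invented confidence values, for illustration only.
        List<ClassScore> classes = List.of(
                new ClassScore("LookupMovies", 0.04),
                new ClassScore("LookupActors", 0.93),
                new ClassScore("Greeting", 0.03));
        for (NameValue nv : toProfileVariables(classes)) {
            System.out.println(nv.name() + "=" + nv.value());
        }
    }
}
```

Running `main` prints `Class1=LookupActors`, `Class1_Confidence=0.93`, `Class2=LookupMovies` and `Class2_Confidence=0.04`; in the real application these pairs would then be passed to `updateProfile`.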

In the dialog definition, the name of the most likely class is used as user input if its confidence level is 0.90 or higher.

```xml
<if matchType="ANY" id="profileCheck_2531803">
    <cond varName="Class1_Confidence" operator="GREATER_THEN">0.90</cond>
    <cond varName="Class1_Confidence" operator="EQUALS">0.90</cond>
    <output>
        <prompt/>
        <action varName="Defaulted_Previous" operator="SET_TO_YES"/>
        <action varName="User_Input" operator="APPEND">~{Class1}</action>
        <goto ref="search_2414738">
            <action varName="User_Input" operator="SET_AS_USER_INPUT"/>
        </goto>
    </output>
</if>
```
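The two `cond` elements with `matchType="ANY"` amount to a single "confidence of at least 0.90" rule. The following Java sketch restates that rule under a few assumptions: the dialog profile is modeled as a plain `Map`, `ConfidenceRouting` and `effectiveInput` are hypothetical names, and the class name is appended with a space separator (the `~` prefix in the `APPEND` action is not modeled). It only illustrates the threshold logic, not how the Dialog service evaluates it internally.

```java
import java.util.Map;

public class ConfidenceRouting {

    // Threshold from the dialog fragment: GREATER_THEN 0.90 or EQUALS 0.90.
    static final double THRESHOLD = 0.90;

    // Returns the input the dialog continues with: the user's text with the
    // top class name appended if Class1_Confidence >= 0.90, else unchanged.
    static String effectiveInput(String userInput, Map<String, String> profile) {
        String className = profile.get("Class1");
        String confidence = profile.get("Class1_Confidence");
        if (className == null || confidence == null) {
            return userInput; // no classifier result stored yet
        }
        // matchType="ANY" over the two conditions is equivalent to >=.
        if (Double.parseDouble(confidence) >= THRESHOLD) {
            return userInput + " " + className; // simplified stand-in for APPEND ~{Class1}
        }
        return userInput;
    }

    public static void main(String[] args) {
        // Hypothetical profile values, for illustration only.
        Map<String, String> confident = Map.of("Class1", "LookupActors", "Class1_Confidence", "0.93");
        Map<String, String> unsure = Map.of("Class1", "LookupActors", "Class1_Confidence", "0.55");
        System.out.println(effectiveInput("who is in it", confident)); // who is in it LookupActors
        System.out.println(effectiveInput("who is in it", unsure));    // who is in it
    }
}
```

Keeping the threshold in the dialog definition rather than in Java, as the original application does, means it can be tuned without redeploying the application code.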

The class names can then be used in the grammar of input nodes.

```xml
<folder label="BASE SEQUENCES" id="folder_2414701">
    <action varName="Small_Talk_Count" operator="SET_TO">0</action>
    <action varName="Greeting_Count" operator="SET_TO">0</action>
    <input isAutoLearnCandidate="true" isRelatedNodeCandidate="true" comment="LookupActors ...">
        <grammar>
            <item>LookupActors</item>
            <item>I want to look up actors</item>
            <item>look up actors</item>
            <item>* LookupActors</item>
        </grammar>
        <action varName="Defaulted_Previous" operator="SET_TO_NO"/>
        <output>
            <prompt selectionType="RANDOM">
                <item>I'm afraid I can't look up cast members.</item>
            </prompt>
            <goto ref="getUserInput_2531765"/>
        </output>
    </input>
</folder>
```
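To illustrate why the grammar contains both `LookupActors` and `* LookupActors`, here is a deliberately simplified matcher. It assumes that `*` acts as a wildcard for any text and that all other characters must match exactly, ignoring case. The Dialog service’s actual matching is likely more tolerant than this, and `GrammarMatch` is a hypothetical helper, not part of any Watson API.

```java
import java.util.List;
import java.util.regex.Pattern;

public class GrammarMatch {

    // Turns a grammar item into a regex: each '*' becomes ".*" (any text),
    // all other characters are matched literally, ignoring case.
    static Pattern toPattern(String grammarItem) {
        String[] literals = grammarItem.trim().split("\\*", -1);
        StringBuilder regex = new StringBuilder();
        for (int i = 0; i < literals.length; i++) {
            if (i > 0) {
                regex.append(".*");
            }
            regex.append(Pattern.quote(literals[i]));
        }
        return Pattern.compile(regex.toString(), Pattern.CASE_INSENSITIVE);
    }

    // True if any grammar item matches the whole (trimmed) input.
    static boolean matchesAny(List<String> grammar, String input) {
        String text = input.trim();
        return grammar.stream().anyMatch(item -> toPattern(item).matcher(text).matches());
    }

    public static void main(String[] args) {
        // Grammar items copied from the LookupActors input node above.
        List<String> grammar = List.of(
                "LookupActors",
                "I want to look up actors",
                "look up actors",
                "* LookupActors");

        System.out.println(matchesAny(grammar, "look up actors"));            // true
        System.out.println(matchesAny(grammar, "who is in it LookupActors")); // true, via "* LookupActors"
        System.out.println(matchesAny(grammar, "who is in it"));              // false
    }
}
```

When the class name has been appended to free-form input, the `* LookupActors` item is what catches it; without the classifier, the same question matches none of the items, which corresponds to the behavior of the first version of the application.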