watsonx Assistant and watsonx Orchestrate can access large language models (LLMs) from watsonx.ai to build conversational experiences with generative AI, using state-of-the-art models from Mistral, Meta, and IBM. This blog post explains how to invoke models hosted on watsonx.ai via REST APIs.

Overview

There are several ways to use generative AI and LLMs in watsonx Assistant and watsonx Orchestrate, for example in Conversational Skills. This post covers one of them.

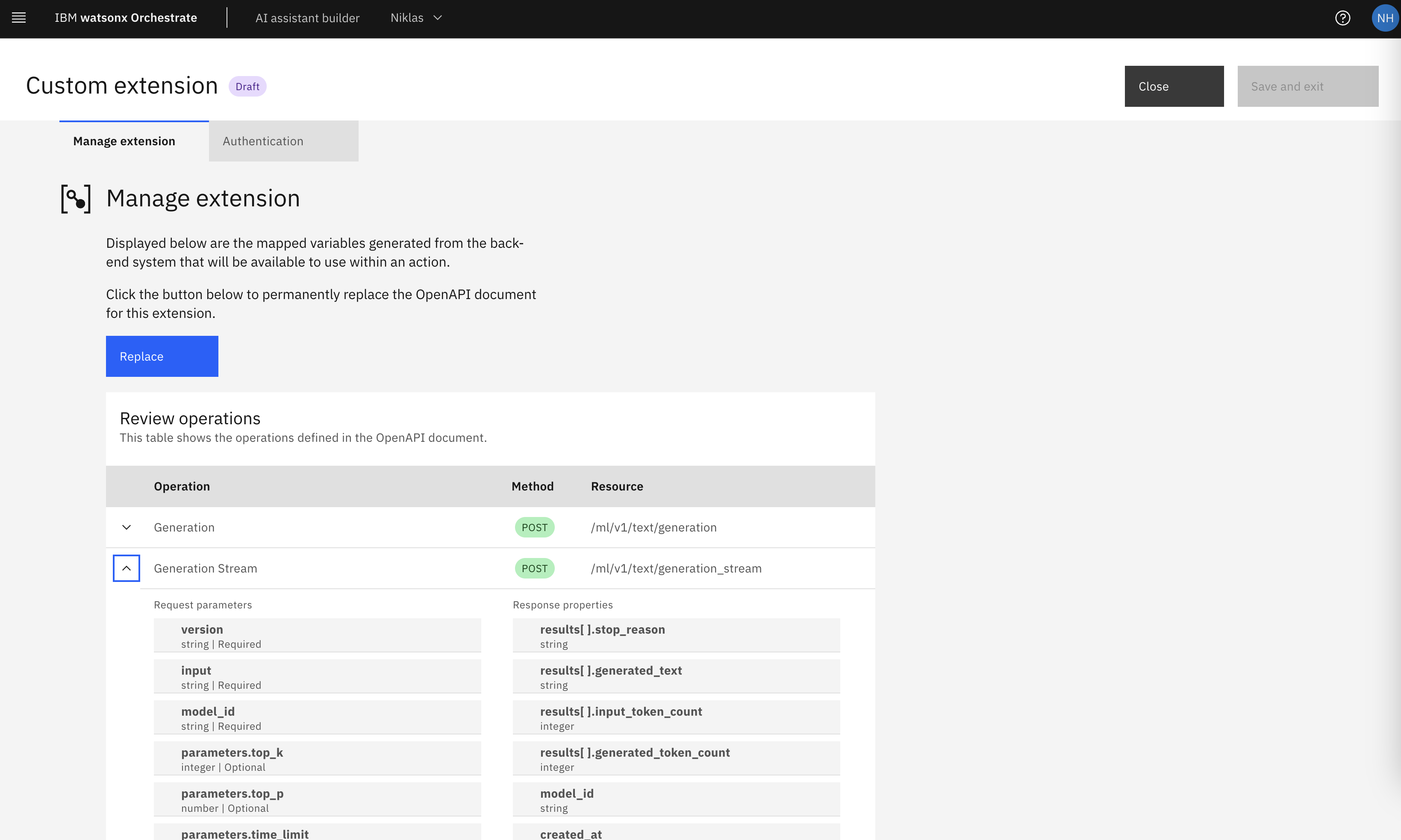

In watsonx Assistant and watsonx Orchestrate, you can register extensions by providing a Swagger/OpenAPI specification; the REST APIs described in that specification can then be invoked from the assistant. This post describes how to use this capability to run LLM inferences.
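To illustrate what such a specification describes, here is a minimal sketch of an OpenAPI 3.0 document for a single text-generation operation, built as a Python dictionary and serialized to JSON. It is hypothetical: the path, the `version` query parameter, the request fields, and the server URL are assumptions modeled on the watsonx.ai REST API, not the actual specification shipped with the Starter Kit.

```python
import json


def build_minimal_spec(server_url: str) -> dict:
    """Return a hypothetical, minimal OpenAPI 3.0 document (sketch only)."""
    return {
        "openapi": "3.0.3",
        "info": {"title": "LLM inference (sketch)", "version": "1.0.0"},
        "servers": [{"url": server_url}],
        # Assumed bearer-token auth (an IBM Cloud IAM token in practice).
        "components": {
            "securitySchemes": {"bearerAuth": {"type": "http", "scheme": "bearer"}}
        },
        "security": [{"bearerAuth": []}],
        "paths": {
            "/ml/v1/text/generation": {
                "post": {
                    "operationId": "generateText",
                    # Assumed API version date passed as a query parameter.
                    "parameters": [
                        {
                            "name": "version",
                            "in": "query",
                            "required": True,
                            "schema": {"type": "string"},
                        }
                    ],
                    "requestBody": {
                        "required": True,
                        "content": {
                            "application/json": {
                                "schema": {
                                    "type": "object",
                                    "required": ["model_id", "input", "project_id"],
                                    "properties": {
                                        "model_id": {"type": "string"},
                                        "input": {"type": "string"},
                                        "project_id": {"type": "string"},
                                    },
                                }
                            }
                        },
                    },
                    "responses": {"200": {"description": "Generated text"}},
                }
            }
        },
    }


spec = build_minimal_spec("https://us-south.ml.cloud.ibm.com")
# The JSON text is what you would upload when registering an extension.
spec_json = json.dumps(spec, indent=2)
print(spec_json)
```

The real specification will define more operations and response schemas; the point here is only the shape: one server, one authenticated POST operation, and a JSON request body carrying the model name, project ID, and prompt.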

Detailed instructions and the Swagger API documentation can be found in the IBM watsonx Language Model Starter Kit.

Extensibility

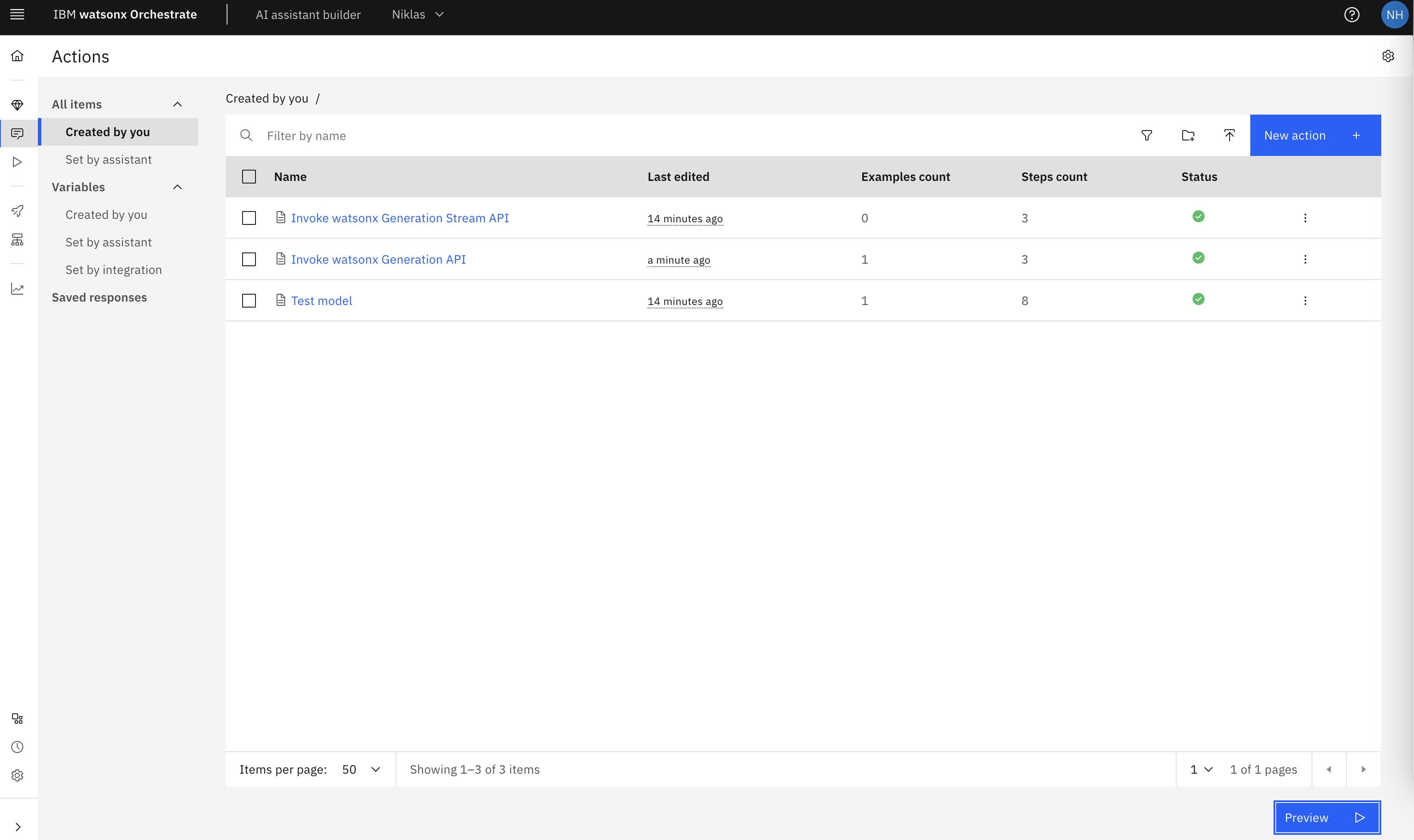

First install the extension; the accompanying actions can then be imported.

Usage

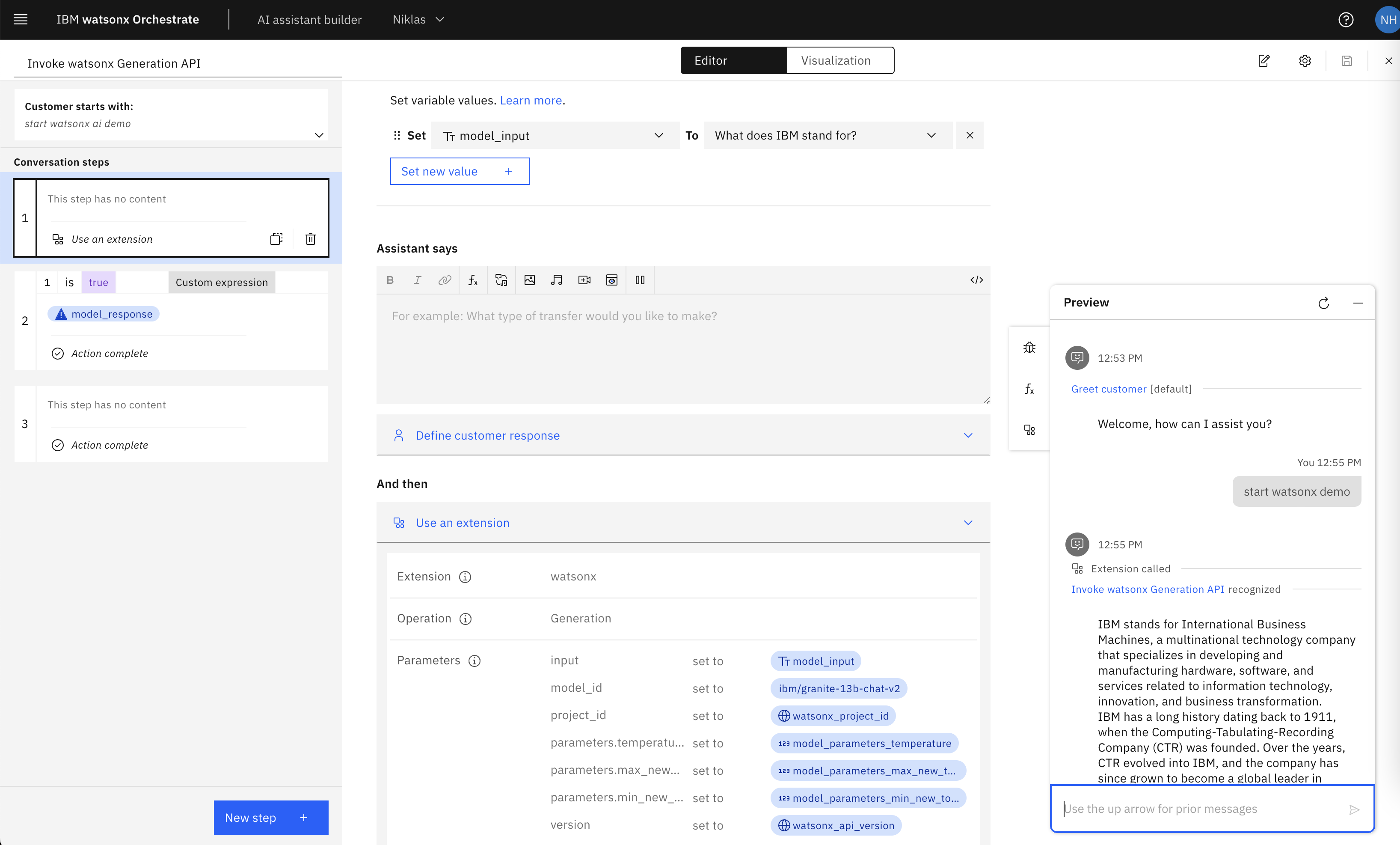

Using the extension is simple: configure the model name and the watsonx.ai project ID, then define the prompt. One action handles synchronous invocations and another handles streaming. See the screenshot at the top of this post.
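The sketch below shows, in plain Python, roughly what the two actions send to watsonx.ai: the same model name, project ID, and prompt, posted either to a synchronous endpoint or to a streaming one. The endpoint paths, the `version=2023-05-29` date, the `parameters` fields, and the response shape (`results[0].generated_text`) are assumptions based on the public watsonx.ai REST API and should be checked against its API reference; `token` stands for an IBM Cloud IAM bearer token obtained separately (not shown). The helper names are hypothetical, and the functions only build requests and parse responses, so they run without network access.

```python
import json
import urllib.request

# Assumed API version date for the watsonx.ai REST API; verify before use.
API_VERSION = "2023-05-29"


def build_generation_request(region, token, model_id, project_id, prompt,
                             stream=False, max_new_tokens=200):
    """Build (but do not send) a text-generation request (hypothetical helper)."""
    # Assumed endpoints: one for synchronous calls, one for streaming (SSE).
    path = "text/generation_stream" if stream else "text/generation"
    url = f"https://{region}.ml.cloud.ibm.com/ml/v1/{path}?version={API_VERSION}"
    body = {
        "model_id": model_id,
        "project_id": project_id,
        "input": prompt,
        "parameters": {"decoding_method": "greedy", "max_new_tokens": max_new_tokens},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            "Accept": "text/event-stream" if stream else "application/json",
        },
        method="POST",
    )


def parse_sync_response(raw: bytes) -> str:
    """Extract the generated text from a synchronous JSON response (assumed shape)."""
    return json.loads(raw)["results"][0]["generated_text"]


def parse_stream_lines(lines) -> str:
    """Concatenate partial texts from server-sent-event lines (assumed shape)."""
    parts = []
    for line in lines:
        line = line.strip()
        # Only "data:" lines carry payloads; skip "id:", "event:", and blank lines.
        if not line.startswith("data:"):
            continue
        data = line[len("data:"):].strip()
        if data:
            parts.append(json.loads(data)["results"][0]["generated_text"])
    return "".join(parts)
```

A synchronous call would then look like `urllib.request.urlopen(build_generation_request("us-south", token, "<model id>", "<project id>", "Summarize: ..."))`, with the response body passed to `parse_sync_response`. For streaming, pass `stream=True` and feed the decoded response lines to `parse_stream_lines`, or render each chunk as it arrives, which is what makes streaming feel responsive in a chat.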

Next Steps

To learn more, check out the watsonx Orchestrate landing page, the watsonx.ai documentation, and the watsonx.ai home page.